Overview

Our data loader is ready to batch together our amenity IDs and handle the request to the REST endpoint. It's officially registered in our application, but it isn't being used anywhere yet.

In this lesson, we will:

- Update our datafetcher to delegate responsibility to the data loader

Using our data loader

We've taken care of the first two steps in our plan to bring data loaders into our application.

- We created a data loader class.

- We gave our class a load method.

Now we'll update our datafetcher method for the Listing.amenities field. Rather than calling our ListingService methods directly, it will delegate responsibility to the data loader to gather up, and provide amenity data for, all of the listings in our query.

Step 3 - Updating our datafetcher

Back in datafetchers/ListingDataFetcher, we have a few changes to make.

Let's start with some additional imports we'll need.

import org.dataloader.DataLoader;
import java.util.concurrent.CompletableFuture;

Down in our method, let's remove the line that calls out to the ListingService's amenitiesRequest.

@DgsData(parentType = "Listing")
public List<Amenity> amenities(DgsDataFetchingEnvironment dfe) throws IOException {
    ListingModel listing = dfe.getSource();
    String id = listing.getId();
    Map<String, Boolean> localContext = dfe.getLocalContext();
    if (localContext.get("hasAmenityData")) {
        return listing.getAmenities();
    }
-   return listingService.amenitiesRequest(id);
}

Now, we'll update our datafetcher to call the data loader instead. We can access any registered data loader (any class that has the @DgsDataLoader annotation applied) in our application by calling the dfe.getDataLoader() method, and providing a name.

if (localContext.get("hasAmenityData")) {
    return listing.getAmenities();
}
dfe.getDataLoader("amenities");

This returns a DataLoader type, with two type variables: the first describes the type of data for the identifiers that are passed into the data loader. The second describes the type of data that is returned for each identifier. We're passing in our listing IDs of type String, and for each identifier, we expect to get a List of Amenity types back.

DataLoader<String, List<Amenity>> amenityDataLoader = dfe.getDataLoader("amenities");
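
To make the two type variables concrete, here is a minimal sketch in plain Java. It does not use the real org.dataloader.DataLoader: TinyLoader, TinyLoaderDemo, and amenitiesFor are hypothetical names, and amenity names (plain strings) stand in for Amenity objects. The sketch only mimics the general shape, assuming keys of type K go in and a CompletableFuture of type V comes back once the queued keys are sent off as a batch.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.function.Function;

class TinyLoaderDemo {
    static List<String> amenitiesFor(String listingId) {
        // String keys in, List<String> values out.
        TinyLoader<String, List<String>> loader = new TinyLoader<>(ids -> {
            Map<String, List<String>> byId = new LinkedHashMap<>();
            for (String id : ids) {
                byId.put(id, List.of("wifi-for-" + id));
            }
            return byId;
        });
        CompletableFuture<List<String>> future = loader.load(listingId);
        boolean doneBeforeDispatch = future.isDone(); // false: the key is only queued
        loader.dispatch();
        return doneBeforeDispatch ? List.of() : future.join();
    }

    public static void main(String[] args) {
        System.out.println(amenitiesFor("listing-1"));
    }
}

// Hypothetical stand-in for a data loader; NOT the org.dataloader implementation.
// K is the type of the identifiers passed in, V the type returned for each one.
class TinyLoader<K, V> {
    private final Function<List<K>, Map<K, V>> batchFunction;
    private final Map<K, CompletableFuture<V>> pending = new LinkedHashMap<>();

    TinyLoader(Function<List<K>, Map<K, V>> batchFunction) {
        this.batchFunction = batchFunction;
    }

    // Queues the key and returns a future; nothing is fetched yet.
    CompletableFuture<V> load(K key) {
        return pending.computeIfAbsent(key, k -> new CompletableFuture<>());
    }

    // Sends every queued key to the batch function in a single call,
    // then completes each waiting future with its own result.
    void dispatch() {
        Map<K, CompletableFuture<V>> batch = new LinkedHashMap<>(pending);
        pending.clear();
        Map<K, V> results = batchFunction.apply(new ArrayList<>(batch.keySet()));
        batch.forEach((key, future) -> future.complete(results.get(key)));
    }
}
```

In the lesson's datafetcher, K is String (the listing ID) and V is List<Amenity>, and DGS decides when the batch is dispatched, so there is no explicit dispatch call in our own code.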

Now we can call the load method of our amenityDataLoader, passing in the id of the listing we're resolving amenity data for.

DataLoader<String, List<Amenity>> amenityDataLoader = dfe.getDataLoader("amenities");
return amenityDataLoader.load(id);

Pretty quickly we'll see a red squiggly on the new line that we've added. Our IDE is complaining because our method's return type is List<Amenity>, but our data loader's load method returns a type of CompletableFuture<List<Amenity>>.

We can't just update the method's return type to CompletableFuture<List<Amenity>>, because our method has the possibility of returning a List<Amenity> type if that data is already available. To mitigate this mismatch, we'll instead update our method's return type to Object.

@DgsData(parentType = "Listing")
public Object amenities(DgsDataFetchingEnvironment dfe) throws IOException {
    ListingModel listing = dfe.getSource();
    String id = listing.getId();
    Map<String, Boolean> localContext = dfe.getLocalContext();
    if (localContext.get("hasAmenityData")) {
        return listing.getAmenities();
    }
    DataLoader<String, List<Amenity>> amenityDataLoader = dfe.getDataLoader("amenities");
    return amenityDataLoader.load(id);
}
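
The two return paths can also be sketched without DGS at all. In this illustration, ObjectReturnSketch, resolveAmenities, and unwrap are hypothetical names, and strings stand in for Amenity objects. The unwrap helper is only an assumption about the general idea of how a caller can treat both kinds of value; it is not a description of how DGS is implemented.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;

class ObjectReturnSketch {
    // Mirrors the datafetcher's two paths: a plain List when the data is
    // already on hand, otherwise a CompletableFuture that resolves later.
    static Object resolveAmenities(boolean hasAmenityData, List<String> cached) {
        if (hasAmenityData) {
            return cached;
        }
        // Stand-in for amenityDataLoader.load(id).
        return CompletableFuture.supplyAsync(() -> List.of("Pool", "Wifi"));
    }

    // Hypothetical caller: waits on a future, passes a plain value through.
    static Object unwrap(Object result) {
        if (result instanceof CompletableFuture<?>) {
            return ((CompletableFuture<?>) result).join();
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(unwrap(resolveAmenities(true, List.of("Sauna"))));
        System.out.println(unwrap(resolveAmenities(false, List.of())));
    }
}
```

Declaring the return type as Object gives up some compile-time checking, which is the trade-off for letting one method return either kind of value.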

Stepping back, we can see the new flow of this Listing.amenities datafetcher. We still have two paths:

- If we already have access to amenity data (localContext.get("hasAmenityData")), we can simply return the amenities property on our Listing instance.

- Otherwise, we need to make a follow-up request for amenity data. Our datafetcher will pass the listing id to the data loader to be batched together in one big request for the amenities of all the listings in our query.

Before, our datafetcher called our data source in a new request for every listingId it received. Now, it passes each listing's id through to our data loader class, which does the work of batching all of the IDs it receives together in a single request.

This lets the datafetcher continue to fulfill its regular responsibilities—namely, being called for each instance of Listing.amenities it's meant to help resolve—without bogging down performance with multiple network calls. When a call across the network becomes necessary, it passes each ID into the data loader, and DGS takes care of the rest!
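
The difference in network traffic can be illustrated with a small counter. This is a plain-Java sketch with hypothetical names (RequestCountSketch, fetchOne, fetchMany, requestCount); the fetch methods only simulate calls to a data source and make no real requests.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class RequestCountSketch {
    static int requestCount = 0;

    // Simulates one request per listing ID (the earlier behavior).
    static List<String> fetchOne(String listingId) {
        requestCount++;
        return List.of("amenity-of-" + listingId);
    }

    // Simulates a single request covering every listing ID (the batched behavior).
    static Map<String, List<String>> fetchMany(List<String> listingIds) {
        requestCount++;
        Map<String, List<String>> byId = new LinkedHashMap<>();
        for (String id : listingIds) {
            byId.put(id, List.of("amenity-of-" + id));
        }
        return byId;
    }

    static int requestsWithoutBatching(List<String> listingIds) {
        requestCount = 0;
        for (String id : listingIds) {
            fetchOne(id);
        }
        return requestCount;
    }

    static int requestsWithBatching(List<String> listingIds) {
        requestCount = 0;
        List<String> queued = new ArrayList<>(listingIds); // IDs are gathered first
        fetchMany(queued);                                 // then sent together
        return requestCount;
    }

    public static void main(String[] args) {
        List<String> ids = List.of("listing-1", "listing-2", "listing-3");
        System.out.println("Without batching: " + requestsWithoutBatching(ids)); // prints 3
        System.out.println("With batching: " + requestsWithBatching(ids));       // prints 1
    }
}
```

With three listings the saving is small, but the unbatched count grows with every listing in the query while the batched count stays at one.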

Running a query

We've made a lot of changes, so let's stop our running server and relaunch it.

Press the play button, or run the following command.

./gradlew bootRun

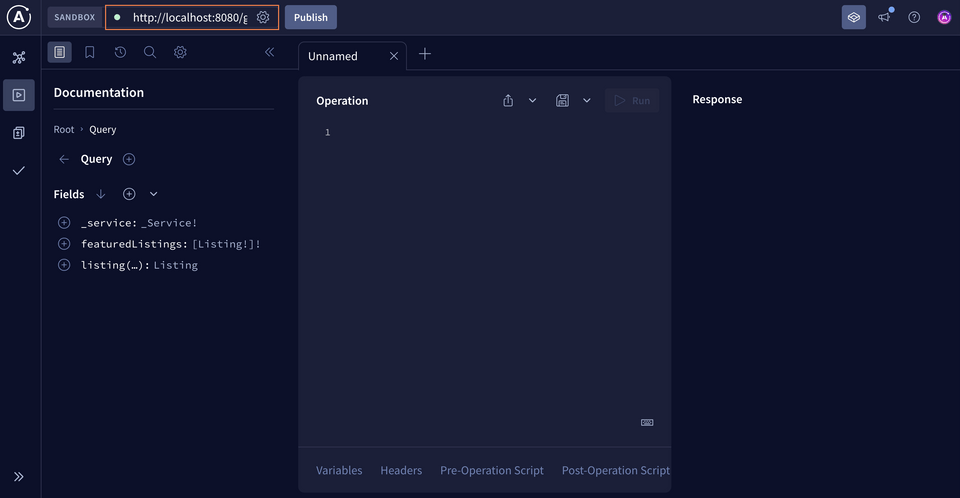

Let's jump back into Sandbox and make sure that our running server is connected.

We'll run the same query as before, keeping our eyes on the application terminal so we can monitor the number of requests being made through the messages we logged.

query GetFeaturedListingsAmenities {
  featuredListings {
    id
    title
    amenities {
      id
      name
      category
    }
  }
}

When we run the query, we should see a list of listings and their amenities returned.

And we see just one line printed out in our terminal:

Calling the /amenities/listings endpoint with listings [listing-1, listing-2, listing-3]

Our listing IDs have successfully been batched together in a single request! 👏👏👏

Key takeaways

- To actually use a data loader, we call its load method in a datafetcher method.

- The DgsDataFetchingEnvironment parameter available to every datafetcher method includes a getDataLoader method.

- DGS' built-in data loaders come with caching benefits, and will deduplicate the keys they make requests for.
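
The deduplication and caching idea from the last takeaway can be sketched as follows. CachingLoader and DedupSketch are hypothetical, simplified stand-ins written in plain Java, not the DGS or java-dataloader implementation; the sketch assumes that a key is fetched only once and that later loads of the same key reuse the stored result.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;

class DedupSketch {
    // Returns the key lists that actually reach the simulated batch request.
    static List<List<String>> run() {
        List<List<String>> batchesSeen = new ArrayList<>();
        CachingLoader loader = new CachingLoader(batchesSeen);
        loader.load("listing-1");
        loader.load("listing-2");
        loader.load("listing-1"); // duplicate key: reuses the first future
        loader.dispatch();
        loader.load("listing-1"); // already cached: nothing new to fetch
        loader.dispatch();
        return batchesSeen;
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints [[listing-1, listing-2]]
    }
}

// Hypothetical stand-in; strings take the place of Amenity objects.
class CachingLoader {
    private final Map<String, CompletableFuture<List<String>>> cache = new LinkedHashMap<>();
    private final List<String> queue = new ArrayList<>();
    private final List<List<String>> batchesSeen;

    CachingLoader(List<List<String>> batchesSeen) {
        this.batchesSeen = batchesSeen;
    }

    // A key is queued only the first time it is seen; repeats get the cached future.
    CompletableFuture<List<String>> load(String key) {
        return cache.computeIfAbsent(key, k -> {
            queue.add(k);
            return new CompletableFuture<>();
        });
    }

    // Simulates one batched request for the queued keys, if there are any.
    void dispatch() {
        if (queue.isEmpty()) {
            return;
        }
        List<String> keys = new ArrayList<>(queue);
        queue.clear();
        batchesSeen.add(keys);
        for (String key : keys) {
            cache.get(key).complete(List.of("amenity-of-" + key));
        }
    }
}
```

Four load calls produce a single simulated request containing two distinct keys, which is the behavior the takeaway describes.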

Congratulations!

And with that, you've done it! You've tackled the n+1 problem—with data loaders in your toolbelt, you have the resources to boost your datafetchers' efficiency. We've taken a basic data fetching strategy in our application, and made it into something that can scale with our queries and features. By employing data loaders, we've seen how we can make fewer, more efficient requests across the network, delivering up data much faster than we ever could have before.

Next up: learn how to grow your GraphQL API with an entirely new domain in Federation with Java & DGS. We'll cover the best practices of building a federated graph, along with the GraphOS tools you'll use to make the path toward robust enterprise APIs smooth, observable, and performant!

Thanks for joining us in this course, and we can't wait to see you in the next.
