Overview

The router comes equipped to do a lot of its own caching, without any additional configuration required from us. From introspection responses to query plans, the cache accelerates our router's ability to execute queries over and over again.

In this lesson, we will:

- Learn about query plans

- Inspect the query plans the router generates

- Learn how to warm up the cache on schema changes

Query plans

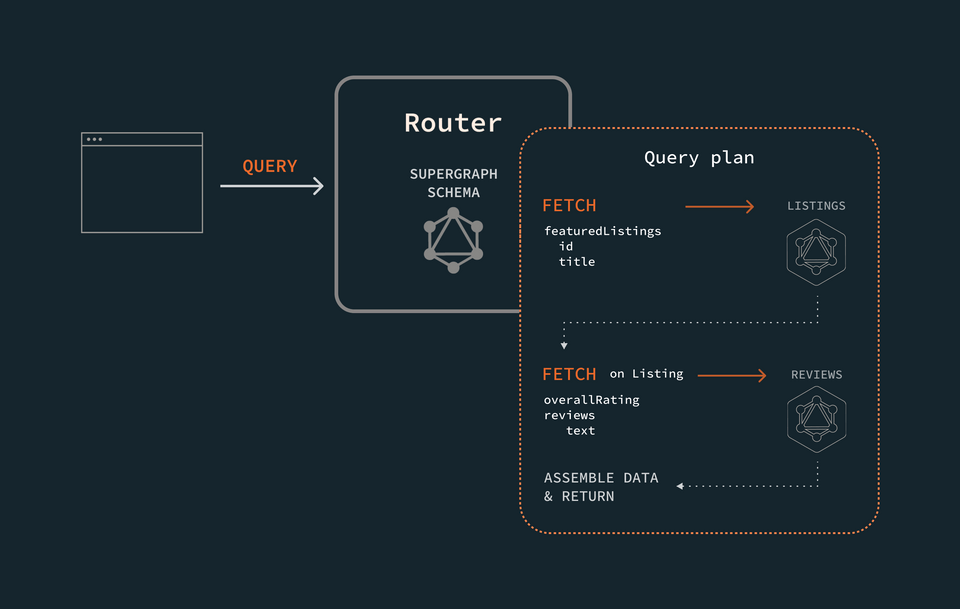

When the router receives a GraphQL operation for the first time, it creates a plan for how to resolve it: the query plan, the set of instructions the router follows to fetch and assemble data from different subgraphs.

Starting from the top-level field of the operation, the router consults the supergraph schema to determine which subgraph is responsible for resolving the field, and starts to build its query plan. The router continues like this, checking each field in the query against the supergraph schema, and adding it to the query plan.

After all the fields are accounted for, the router goes on to execute the plan, making requests to subgraphs in the order outlined in the plan, and assembling the data before returning it back to the client.
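The planning walk described above can be sketched in a few lines of Python. This is a simplified illustration rather than the router's actual algorithm: the `FIELD_OWNERS` table is a hypothetical stand-in for the supergraph schema, and `plan_fetches` is a name made up for this example.

```python
# Hypothetical stand-in for the supergraph schema: which subgraph owns each field.
FIELD_OWNERS = {
    "Listing.title": "listings",
    "Listing.description": "listings",
    "Listing.numOfBeds": "listings",
    "Listing.overallRating": "reviews",
    "Listing.reviews": "reviews",
}


def plan_fetches(type_name, fields):
    """Group the requested fields by the subgraph that resolves them."""
    plan = {}
    for field in fields:
        # Look up the owning subgraph, as the router does with the supergraph schema.
        service = FIELD_OWNERS[f"{type_name}.{field}"]
        plan.setdefault(service, []).append(field)
    return plan


plan = plan_fetches("Listing", ["title", "numOfBeds", "overallRating", "reviews"])
print(plan)
```

Each key in the resulting dictionary corresponds roughly to one fetch the router would need to make. A real plan also records the order of those fetches and how their results are merged.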

Caching query plans

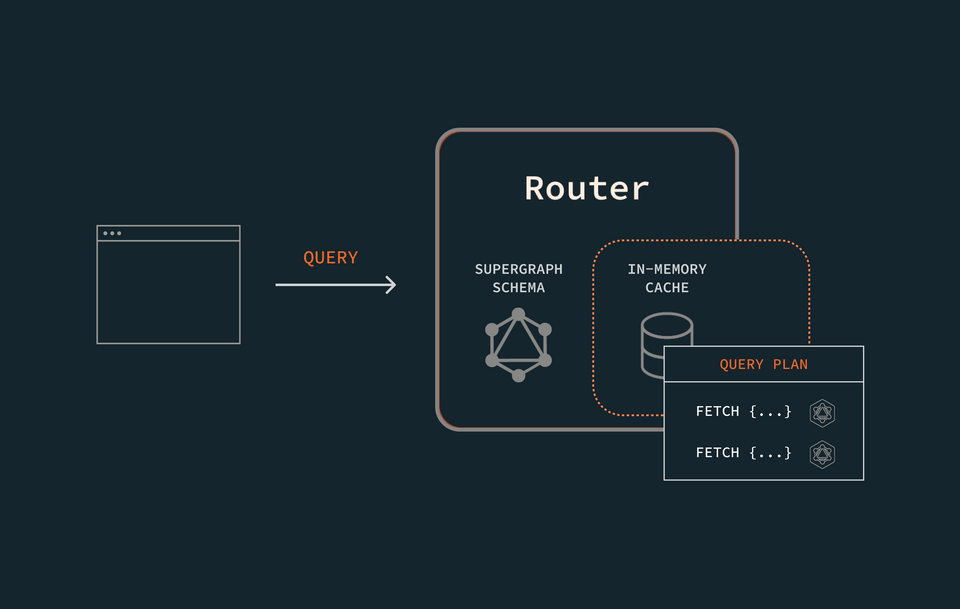

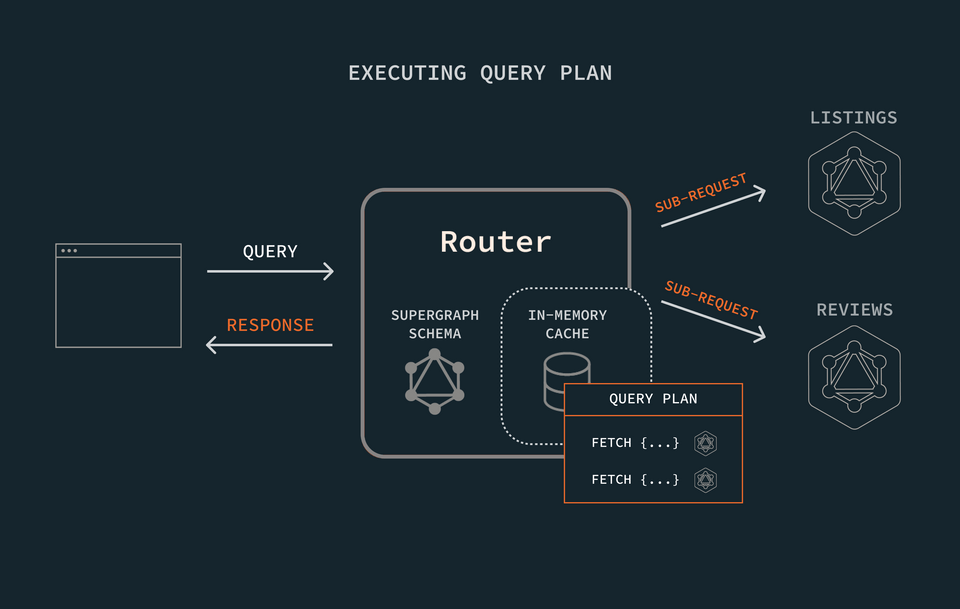

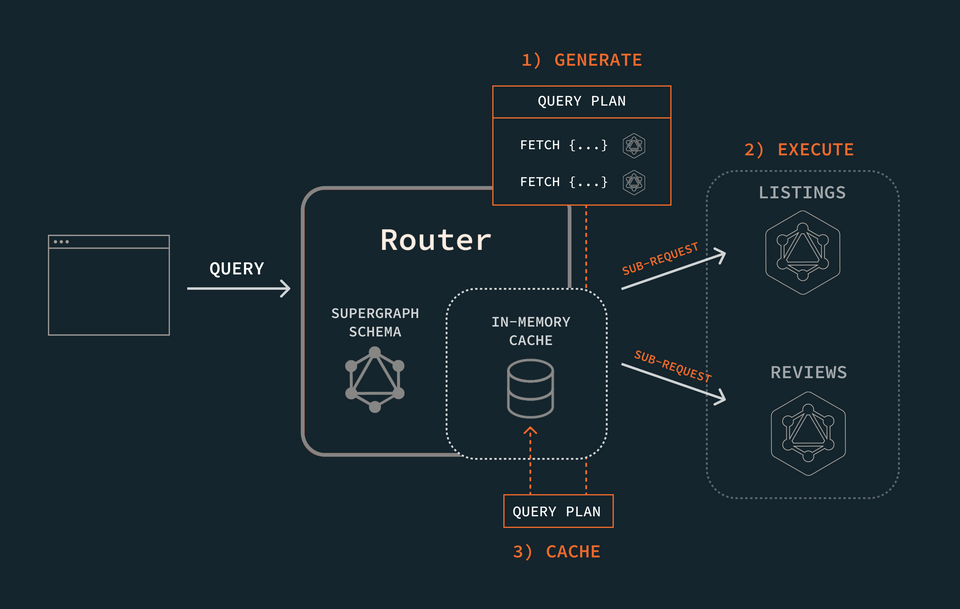

The router uses a query plan for every operation it resolves, but it doesn't necessarily generate that plan each time. When it receives an operation, the router first checks its own in-memory cache for the corresponding query plan.

If it finds it, then great—clearly, it's done this work before! It plucks the plan from the cache and follows the instructions to resolve the operation.

If the router does not find the query plan, it proceeds with generating it from scratch. The router also stores the plan in its in-memory cache, to be used for next time.
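The check-then-generate flow above is a classic "get or compute" cache. Here is a minimal sketch of the idea, assuming the cache is keyed by the operation text alone. The class and function names are invented for illustration, and the router's real cache key and eviction behavior are not covered here.

```python
class QueryPlanCache:
    """Toy in-memory cache keyed by the operation text (an assumption)."""

    def __init__(self, planner):
        self._planner = planner  # function that builds a plan from scratch
        self._plans = {}         # operation text -> cached plan
        self.hits = 0
        self.misses = 0

    def get_plan(self, operation):
        if operation in self._plans:
            # Cache hit: reuse the plan generated earlier.
            self.hits += 1
            return self._plans[operation]
        # Cache miss: generate the plan and store it for next time.
        self.misses += 1
        plan = self._planner(operation)
        self._plans[operation] = plan
        return plan


def fake_planner(operation):
    # Placeholder for the expensive planning step.
    return f"QueryPlan for: {operation}"


cache = QueryPlanCache(fake_planner)
cache.get_plan("query GetFeaturedListings { featuredListings { id } }")
cache.get_plan("query GetFeaturedListings { featuredListings { id } }")
print(cache.hits, cache.misses)
```

The first call misses and generates the plan; the second call finds it in the cache and skips planning entirely.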

Query plans in action

Alright, let's see this in action! First, let's take a look at the query plan for one of our operations.

Jump into GraphOS Studio Explorer and paste the same operation from the previous lesson into the Operation panel.

    query GetFeaturedListings {
      featuredListings {
        id
        title
        description
        numOfBeds
      }
    }

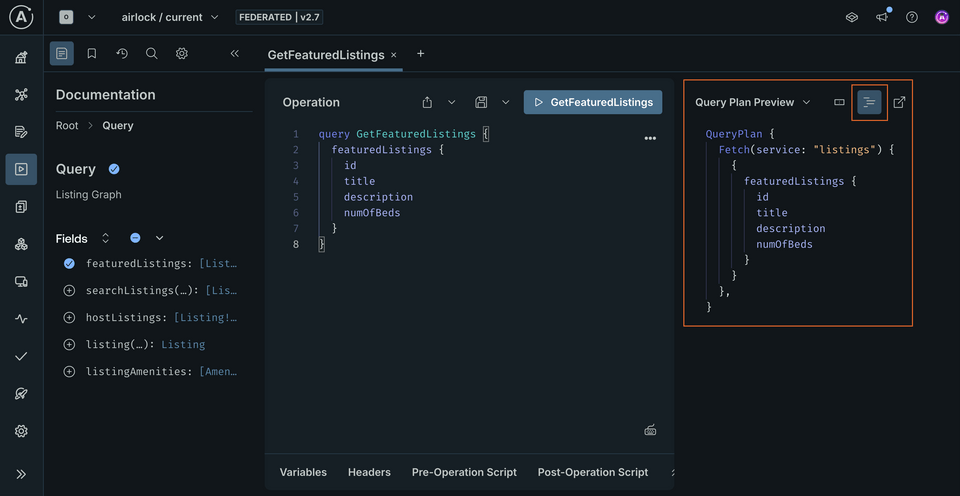

In the Response panel, we'll find a dropdown. Here we can select the option Query Plan Preview. This updates the content of the Response panel with a representation of how the query will be resolved.

There are two options here: we can view the plan as a chart, or as text. Click Show plan as text, as this gives us a little bit more information about what the router does under the hood.

    QueryPlan {
      Fetch(service: "listings") {
        {
          featuredListings {
            id
            title
            description
            numOfBeds
          }
        }
      },
    }

This query plan looks a lot like our original query, doesn't it? The biggest difference is the Fetch statement we see at the top. This tells us which service—namely, "listings"—the router plans to fetch the following types and fields from. Because our query involves a single service, the router can fetch all of the data in a single request to the listings service.

Now let's look at a query plan that involves both subgraphs. In this case, we'll see that the response from one subgraph depends on the response from another.

In a new Operation tab, paste the following query.

    query GetListingAndReviews($listingId: ID!) {
      listing(id: $listingId) {
        title
        description
        numOfBeds
        overallRating
        reviews {
          id
          text
        }
      }
    }

And in the Variables panel:

    {
      "listingId": "listing-1"
    }

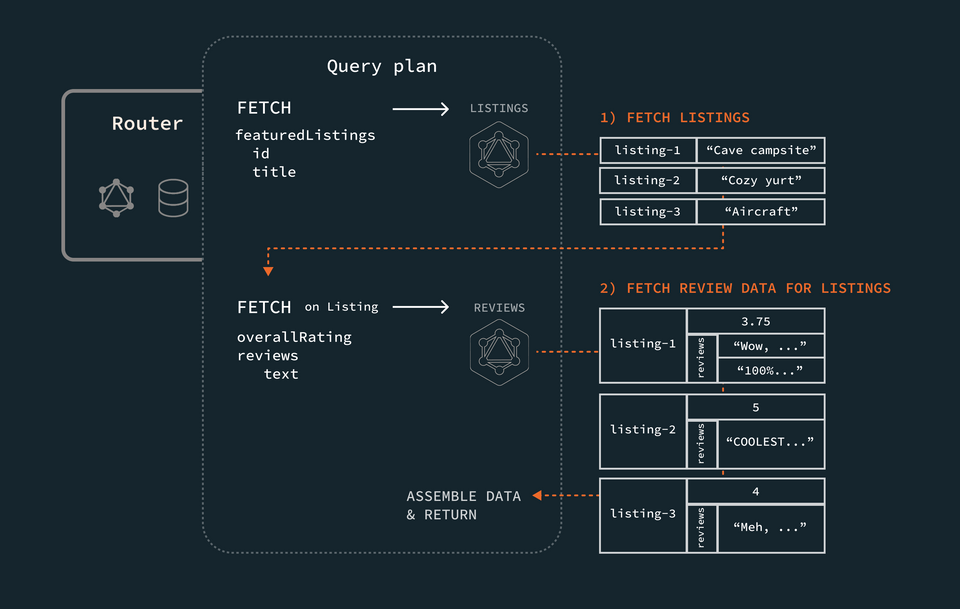

To resolve this query, the router has to coordinate data between the two services. In order for the reviews service to provide the reviews and overallRating fields, it first needs to understand which listing object it's providing data for. We see this reflected in the query plan:

    QueryPlan {
      Sequence {
        Fetch(service: "listings") {
          {
            listing(id: $listingId) {
              __typename
              id
              title
              description
              numOfBeds
            }
          }
        },
        Flatten(path: "listing") {
          Fetch(service: "reviews") {
            {
              ... on Listing {
                __typename
                id
              }
            } =>
            {
              ... on Listing {
                overallRating
                reviews {
                  id
                  text
                }
              }
            }
          },
        },
      },
    }

Here the router defines a Sequence of steps. It first conducts a Fetch to the listings service for all the listing-specific types and fields. Next, it conveys the information the reviews subgraph needs to provide data for a particular listing: __typename and id, the Listing entity's primary key field. With this information, the router can then request the corresponding reviews data from the reviews subgraph. All the data is packaged together, and returned!

Note: If the response from the reviews subgraph did not depend on the response from the listings subgraph, the query plan would reflect that the two requests run in Parallel, rather than as part of a Sequence.
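To make the Sequence concrete, here is a rough Python simulation of its two dependent steps. The data, the function names, and the in-process "subgraphs" are all made up for illustration; the real router sends requests to the subgraphs rather than reading dictionaries.

```python
# Made-up subgraph data, standing in for real subgraph responses.
LISTINGS = {
    "listing-1": {"__typename": "Listing", "id": "listing-1", "title": "Cave campsite"},
}
REVIEWS = {
    "listing-1": {"overallRating": 4.5, "reviews": [{"id": "review-1", "text": "Cozy!"}]},
}


def fetch_listing(listing_id):
    """Step 1: ask the (simulated) listings subgraph for the listing fields."""
    return dict(LISTINGS[listing_id])


def fetch_reviews(representation):
    """Step 2: ask the (simulated) reviews subgraph, identifying the entity
    by its __typename and key field."""
    assert representation["__typename"] == "Listing"
    return dict(REVIEWS[representation["id"]])


def run_sequence(listing_id):
    listing = fetch_listing(listing_id)
    # Only __typename and id are passed along, mirroring the plan's "... on Listing" selection.
    representation = {"__typename": listing["__typename"], "id": listing["id"]}
    # Merge the reviews fields into the listing, much as Flatten(path: "listing") suggests.
    listing.update(fetch_reviews(representation))
    return {"listing": listing}


result = run_sequence("listing-1")
print(result["listing"]["title"], result["listing"]["overallRating"])
```

The second fetch cannot start until the first has returned, because its input is built from the first response. That dependency is why the plan uses a Sequence here.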

Now that we've seen what query plans look like in action, let's take a closer look at the router's side of things.

Query plans & the router

To investigate what the router is doing, we'll need to provide a new flag to our router start command: --log. This lets us specify the level of verbosity we want in our logs from the router. For our purposes, we'll set the level at trace to better follow what the router is doing when it comes to query plans and caching.

On macOS or Linux:

    APOLLO_KEY=<APOLLO_KEY> \
    APOLLO_GRAPH_REF=<APOLLO_GRAPH_REF> \
    ./router --config router-config.yml \
    --log trace

On Windows (PowerShell):

    $env:APOLLO_KEY="<APOLLO_KEY>"
    $env:APOLLO_GRAPH_REF="<APOLLO_GRAPH_REF>"
    ./router --config router-config.yml --log trace

Note: Check out the official Apollo documentation to review other log level options.

Restart the router using the command given above, substituting in your own APOLLO_KEY and APOLLO_GRAPH_REF values. When we run the command, we'll see our terminal fill up with a lot more output messages from the router.

Back in Explorer, let's run the GetListingAndReviews operation again.

Jumping back to the router terminal logs, we can scroll to find the query plan output. (You can also search for "query plan" in the logs, and pan through until you find the outputted query plan.)

    query plan
    Sequence { nodes: [Fetch(FetchNode { service_name: "listings",
    requires: [], variable_usages: ["listingId"],
    operation: "query GetListingAndReviews__listings__0($listingId:ID!){listing(id:$listingId){__typename id title description numOfBeds}}",
    // ...etc

Just below this, we can see the more explicit path the router takes to execute its plan. It narrates its first request to the listings subgraph, and logs the response. Then it's able to use this information about the listing entity we're querying to fetch the corresponding data with a new request to the reviews subgraph!

To see more detail about where the router locates its stored query plan, we'll set up a Prometheus dashboard later on. Stay tuned!

Warming up the cache

When we push updates to our schema, it's likely that the query plans for many of our operations will change. We might have migrated fields from one service to another, for instance. This means that when the router loads a new schema, some of its cached query plans might no longer be usable.

To counteract this, the router automatically pre-computes query plans for:

- The most used queries in its cache

- The entire list of persisted queries (more on this in the next lesson!)

To learn more about configuring the number of queries to "warm up" on schema reload, visit the official Apollo documentation.
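The warm-up idea can be sketched as follows. This is an illustration under stated assumptions, not the router's implementation: the usage counts, the `warm_up` function, and the `limit` parameter are hypothetical, and how the router actually ranks its "most used" queries is not described in this lesson.

```python
from collections import Counter


def warm_up(usage_counts, plan_for_new_schema, limit):
    """Pre-compute plans for the `limit` most used operations."""
    warmed = {}
    for operation, _count in usage_counts.most_common(limit):
        # Re-plan each popular operation against the new schema ahead of time.
        warmed[operation] = plan_for_new_schema(operation)
    return warmed


# Hypothetical usage counts gathered while the old schema was live.
usage = Counter({"GetFeaturedListings": 120, "GetListingAndReviews": 45, "GetRareThing": 1})

# The lambda is a placeholder for planning against the updated schema.
new_cache = warm_up(usage, lambda op: f"plan(v2, {op})", limit=2)
print(sorted(new_cache))
```

With a limit of 2, only the two most used operations get a pre-computed plan. The rarely used one would be planned on demand the next time it arrives.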

Key takeaways

- The router generates a query plan to describe the steps it needs to take to resolve each query it receives.

- Before generating a query plan, the router first checks its in-memory cache for it. If it finds it, it uses the cached query plan instead of generating a new one.

- When the router receives an updated supergraph schema, it automatically pre-computes query plans for the most used queries in its cache before switching to the new schema.

- We can use the router's config file, along with the warmed_up_queries key, to define a custom number of query plans to pre-compute.

Up next

There's another category of work that the router takes care of caching in-memory: the hashes for Automatic Persisted Queries (APQ). Let's take a closer look at this in the next lesson.
