Overview

Let's get our project running—we're simulating a "production" environment after all!

In this lesson, we will:

- Boot up our environment with docker compose

- Review our files and tools

Start up the containers

The repository for this course contains multiple containers that need to run in concert. To boot up all of the containers at once so that they can interact with one another, we'll use the docker compose up command.

First, make sure your Docker daemon is running.

Next, open up a new terminal to the root of your project repository, and run the following command. We've included the -d flag, which runs the composed containers in "detached" mode. This runs our containers as a background process, rather than requiring a dedicated terminal. (We can always check in on the process in our Docker app.)

Pro tip: Before running docker compose, shut down as many other running processes or programs on your computer as you are able to. Running a "production" environment takes a LOT of CPU!

docker compose up -d

As the containers start up, you should see a lot of output in the terminal. Docker is fetching all of the dependencies needed to launch the containers—this can take a few minutes to complete!
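Because startup can take a few minutes, it can help to check when the services are actually reachable instead of refreshing the browser. The following is a minimal sketch, not part of the course repository. It assumes the editor (8080), Grafana (3000), and router (4000) ports mentioned in this lesson are all published on localhost; the service labels and the helper names `port_is_open` and `wait_for_services` are made up for illustration.

```python
import socket
import time

# Ports mentioned in this lesson. The labels are for display only, and the
# assumption is that each port is published on the host machine.
SERVICES = {"editor": 8080, "grafana": 3000, "router": 4000}


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_for_services(services, host="localhost", deadline_s=300.0, interval_s=5.0):
    """Poll until every port accepts connections or the deadline passes.

    Returns a dict mapping each service label to True (reachable) or False.
    """
    end = time.monotonic() + deadline_s
    status = {name: False for name in services}
    while True:
        for name, port in services.items():
            if not status[name]:
                status[name] = port_is_open(host, port)
        if all(status.values()) or time.monotonic() >= end:
            return status
        time.sleep(interval_s)
```

Calling `wait_for_services(SERVICES)` from a Python shell returns once all three ports accept connections, or after roughly five minutes with `False` for anything still unreachable. An open port only shows that something is listening; it does not guarantee the service has finished initializing.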

The editor environment

When the process is complete, we can open up http://localhost:8080 to access a hosted editor environment.

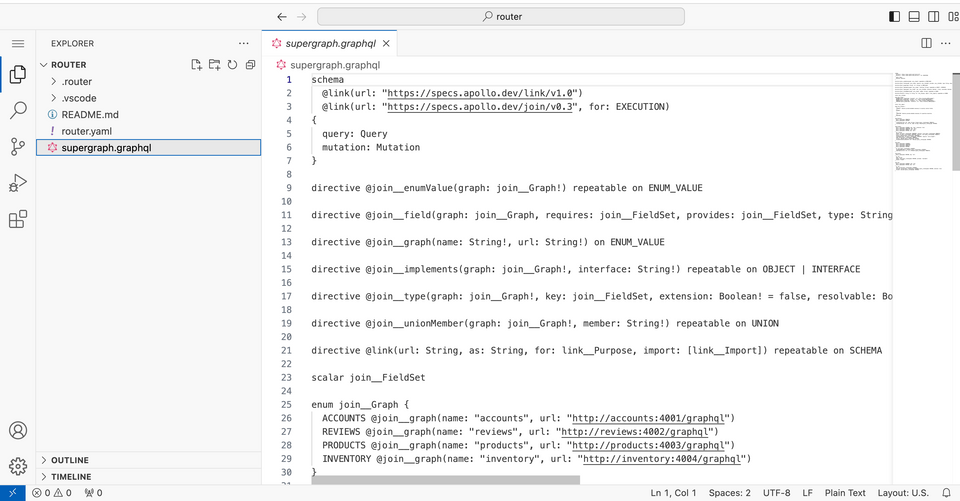

There are a few files here that we'll work with: supergraph.graphql and router.yaml.

In supergraph.graphql, we'll find our entire supergraph schema: the one comprehensive schema document that results from composing all of our smaller subgraph schemas together. We won't need to make any changes to this document as part of the course, but it's useful for referencing which subgraphs are responsible for each type and field.

Next, we'll find the router.yaml file. This is the configuration file for the router.

    supergraph:
      introspection: true
      listen: 0.0.0.0:4000
      query_planning:
        cache:
          in_memory:
            limit: 10
    # ... other configuration

We'll work directly in this file to modify how the router is running as part of our performance investigation.
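To make the nesting of these settings explicit, here is a small sketch that mirrors the excerpt above as a Python dictionary and reads values by dotted path. It assumes the keys nest the way the router's configuration normally does (`query_planning` under `supergraph`, and so on). `get_setting` is a hypothetical helper, not a router API.

```python
# The router.yaml excerpt, mirrored as nested Python data (assumed nesting).
ROUTER_CONFIG = {
    "supergraph": {
        "introspection": True,
        "listen": "0.0.0.0:4000",
        "query_planning": {
            "cache": {"in_memory": {"limit": 10}},
        },
    },
}


def get_setting(config, dotted_path):
    """Walk a nested dict using a dot-separated path (hypothetical helper)."""
    node = config
    for key in dotted_path.split("."):
        node = node[key]  # raises KeyError if the path does not exist
    return node


print(get_setting(ROUTER_CONFIG, "supergraph.query_planning.cache.in_memory.limit"))  # prints 10
print(get_setting(ROUTER_CONFIG, "supergraph.listen"))  # prints 0.0.0.0:4000
```

Thinking of each setting as a path like `supergraph.query_planning.cache.in_memory.limit` makes it easier to find the right indentation level when editing the file.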

Observability with Grafana

As we test our API, we'll use a tool called Grafana. Grafana is an observability tool that lets us build dashboards that monitor how systems are performing and what events are occurring. Best of all, it's already connected to our running router—we can access it at http://localhost:3000.

By default, we'll see the getting started landing page.

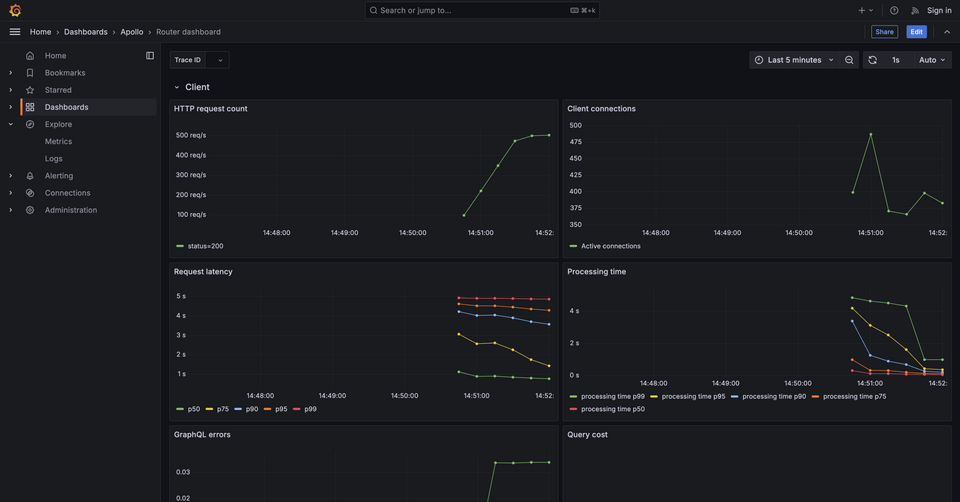

We can jump right into our router's dashboard by navigating in the left-hand menu to Dashboards > Apollo > Router dashboard.

Note: If you're seeing erratic data in the dashboard from the start (extremely high latency, failed requests), try changing the number of requests being sent per minute to the graph. You can do that by opening the docker-compose.yaml file and modifying the value passed for -rate under the vegeta service, on line 171.

Using Grafana, we'll focus on three aspects of observability: metrics, logs, and tracing.

- Metrics: Datapoints collected over time (such as response times and error rates) that provide insight into how our systems are performing

- Logs: Records of events that have occurred in a system, usually consisting of a timestamp and text that details what happened and when

- Traces: Details of a request's journey through a system, allowing us to pinpoint where an error or issue might be occurring
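To make the distinction between the three signals concrete, here is a toy sketch that records all of them for one simulated request. It is an illustration only and is not how the router or Grafana store data. The `Telemetry` class, the helper functions, and the subgraph names `products` and `reviews` are all hypothetical.

```python
import time
import uuid
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class Telemetry:
    """Toy in-memory store for the three signal types (illustration only)."""
    latencies_ms: list = field(default_factory=list)  # metrics: datapoints over time
    logs: list = field(default_factory=list)          # logs: (timestamp, text) records
    spans: list = field(default_factory=list)         # traces: steps of a request


def handle_request(t, subgraph_ms):
    """Simulate one request that calls each subgraph in turn.

    subgraph_ms maps a (hypothetical) subgraph name to its duration in ms.
    """
    trace_id = uuid.uuid4().hex
    total = sum(subgraph_ms.values())
    # Trace: one parent span for the router, one child span per subgraph call.
    t.spans.append({"trace_id": trace_id, "name": "router", "parent": None, "ms": total})
    for name, ms in subgraph_ms.items():
        t.spans.append({"trace_id": trace_id, "name": name, "parent": "router", "ms": ms})
    # Metric: a single number we can aggregate across many requests.
    t.latencies_ms.append(total)
    # Log: a timestamped description of what happened.
    t.logs.append((time.time(), f"request {trace_id} completed"))
    return trace_id


def slowest_child_span(t, trace_id):
    """Use the trace to pinpoint which step of the request took longest."""
    children = [s for s in t.spans if s["trace_id"] == trace_id and s["parent"]]
    return max(children, key=lambda s: s["ms"])["name"]


telemetry = Telemetry()
tid = handle_request(telemetry, {"products": 12.0, "reviews": 85.0})
print(mean(telemetry.latencies_ms))        # prints 97.0
print(slowest_child_span(telemetry, tid))  # prints reviews
```

The metric says the request was slow, the log says when it happened, and the trace shows which step was responsible.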

Key takeaways

- The metrics from all of our services are hooked up in Grafana, a data observability tool that lets us track various measurements over time.

- Metrics, logs, and traces are three components of observability that let us determine how well a system is performing.

Up next

With our environment live, we're ready to jump in and start identifying our performance bottlenecks. Let's get to it!
