Overview

We've looked at configuring limits on inbound traffic to the router; now let's shift gears and set some controls at the subgraph level.

In this lesson, we will:

- Apply subgraph-level timeouts and rate limiting

Subgraph performance

When building our API using GraphQL federation, different components can end up with varying performance profiles.

One or two subgraphs could be responsible for top-level operations (for instance, defining commonly used fields on the Query type), and as a result would need high reliability. Meanwhile, downstream subgraphs could receive a lot more traffic due to query amplification: a single query might request the reviews for each product, and then the account details of the user who wrote each review.
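To make the amplification concrete, here is a minimal Python sketch that tallies how many entities each subgraph resolves for one products-with-reviews query. The counts (10 products, 5 reviews each) and the helper name entities_resolved are hypothetical. The sketch only counts work. It does not model how the router groups a subgraph's entity lookups into fetches.

```python
from typing import Dict


def entities_resolved(products: int, reviews_per_product: int) -> Dict[str, int]:
    """Tally entities each subgraph resolves for one products-with-reviews query.

    Hypothetical model: every review has exactly one author, and each author
    lookup is counted separately even if the same user wrote several reviews.
    """
    reviews = products * reviews_per_product
    return {
        "products": products,   # the top-level list of products
        "inventory": products,  # one inStock lookup per product
        "reviews": reviews,     # all reviews for every product
        "accounts": reviews,    # one author lookup per review
    }


if __name__ == "__main__":
    print(entities_resolved(products=10, reviews_per_product=5))
    # {'products': 10, 'inventory': 10, 'reviews': 50, 'accounts': 50}
```

The top-level subgraph handles 10 items, but the subgraph at the end of the chain handles five times as many.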

When a query requires data from multiple subgraphs, a failure in one service does not necessarily cause the entire operation to fail. Instead, GraphQL gives us the benefit of partial responses: the data that could be resolved is returned alongside errors for the parts that could not. This gives us the flexibility to limit traffic to a particular subgraph. We can accept partial responses, containing data from the more responsive subgraphs, in exchange for lower latency across the board.
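As a rough illustration of a partial response, the sketch below merges per-subgraph results into a GraphQL-style {data, errors} payload. It nulls out only the fields owned by a subgraph whose fetch failed. This is a simplified model, not how the router actually assembles responses. The helper build_response and the error message text are hypothetical. The sketch also assumes every affected field is nullable, so the null does not propagate up to the parent object.

```python
from typing import Any, Dict, List, Optional


def build_response(
    fetches: Dict[str, Optional[Dict[str, Any]]],
    owners: Dict[str, str],
) -> Dict[str, Any]:
    """Merge per-subgraph results into one GraphQL-style response.

    fetches: subgraph name -> its field values, or None if that fetch failed
             (for example, because it timed out).
    owners:  field name -> the subgraph that resolves it.
    Assumes every field is nullable (hypothetical simplification).
    """
    data: Dict[str, Any] = {}
    errors: List[Dict[str, Any]] = []
    failed = set()
    for field, subgraph in owners.items():
        result = fetches.get(subgraph)
        if result is None:
            data[field] = None  # only this field is missing; the rest survives
            if subgraph not in failed:  # report each failed subgraph once
                failed.add(subgraph)
                errors.append({"message": f"fetch from subgraph '{subgraph}' failed"})
        else:
            data[field] = result.get(field)
    response: Dict[str, Any] = {"data": data}
    if errors:
        response["errors"] = errors
    return response


owners = {"name": "products", "price": "products", "inStock": "inventory"}
print(build_response({"products": {"name": "Chair", "price": 40}, "inventory": None}, owners))
# {'data': {'name': 'Chair', 'price': 40, 'inStock': None}, 'errors': [{'message': "fetch from subgraph 'inventory' failed"}]}
```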

Let's return to our router.yaml configuration file. First of all, we'll reset the router-level settings to a timeout of 1s and a rate limit capacity and interval of 500 and 1s, respectively.

```yaml
traffic_shaping:
  router:
    timeout: 1s
    global_rate_limit:
      capacity: 500
      interval: 1s
```

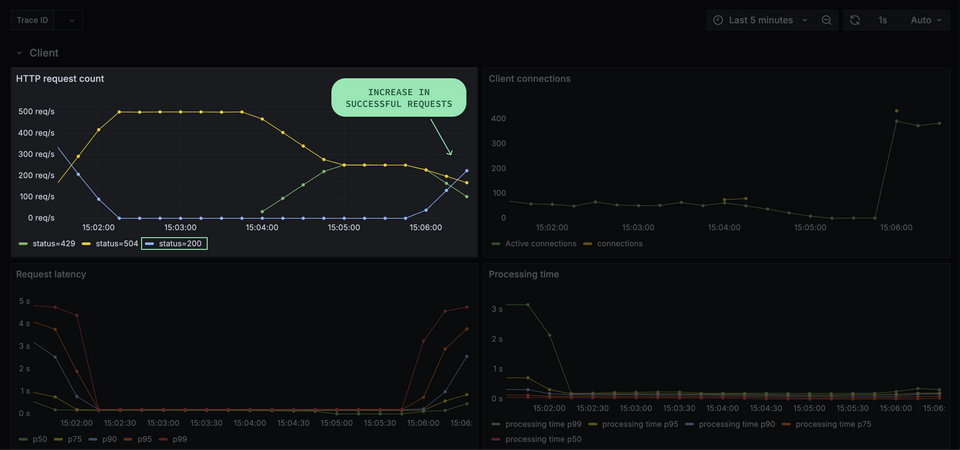

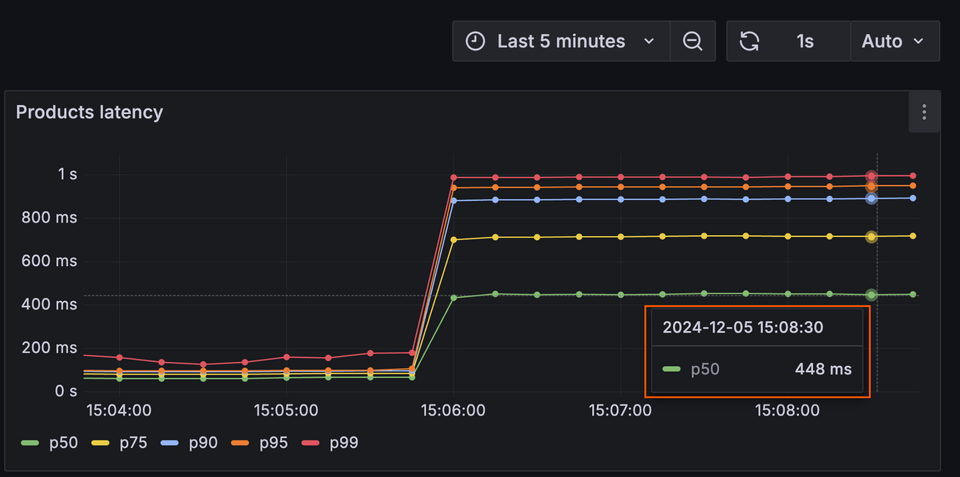

Going back to our Grafana dashboard, we'll see a noticeable effect.

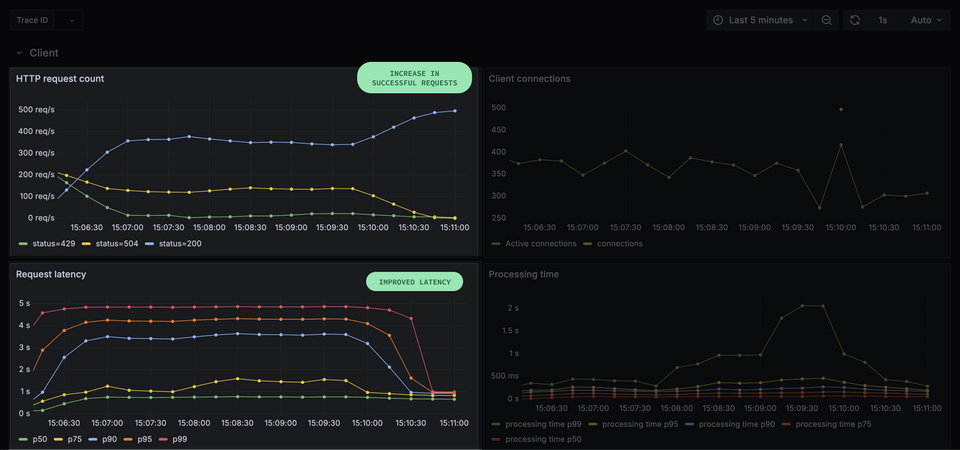

First of all, a longer timeout allows more client requests to succeed with a 200 status code.
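Here is a simplified way to see why. If a request succeeds only when it finishes within the timeout, the success rate is the fraction of latencies at or below that timeout. The latency samples below are made up, and the model ignores rate limiting and other failure modes.

```python
from typing import Sequence


def success_rate(latencies_ms: Sequence[float], timeout_ms: float) -> float:
    """Fraction of requests that finish within the timeout (and so could return 200).

    Simplified model: a request succeeds iff its latency <= timeout_ms.
    """
    if not latencies_ms:
        return 0.0
    within = sum(1 for latency in latencies_ms if latency <= timeout_ms)
    return within / len(latencies_ms)


# Hypothetical latency samples, in milliseconds.
samples = [120, 300, 450, 700, 900, 1100, 1600, 2500]
for timeout in (500, 1000):
    print(timeout, success_rate(samples, timeout))
# 500 0.375
# 1000 0.625
```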

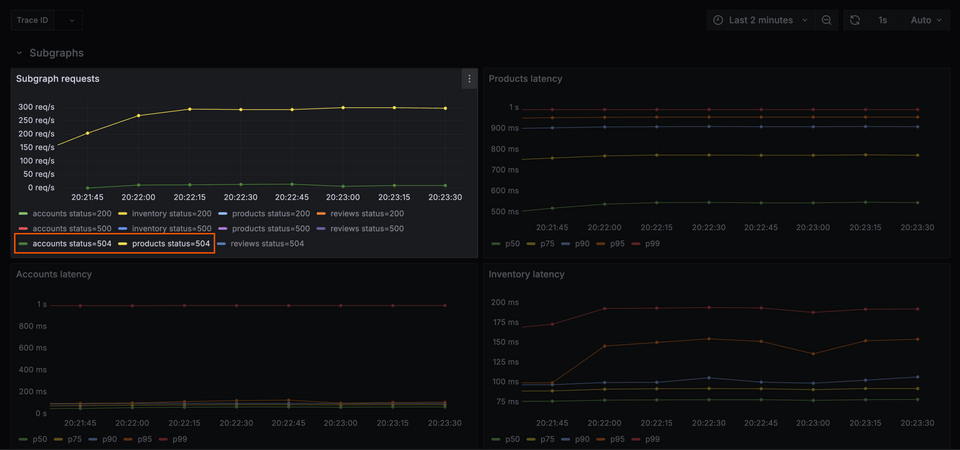

This means that more requests pass through to the subgraphs. We can see this reflected in our Subgraph requests panel, where the number of requests has increased.

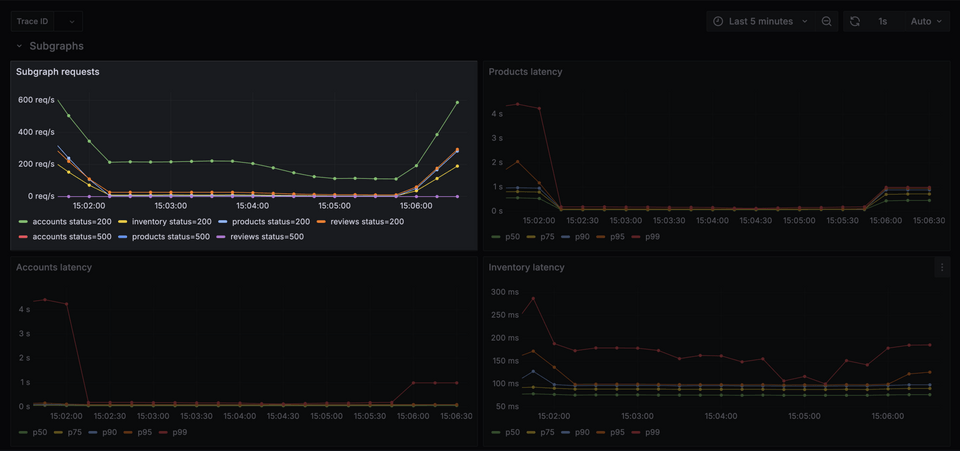

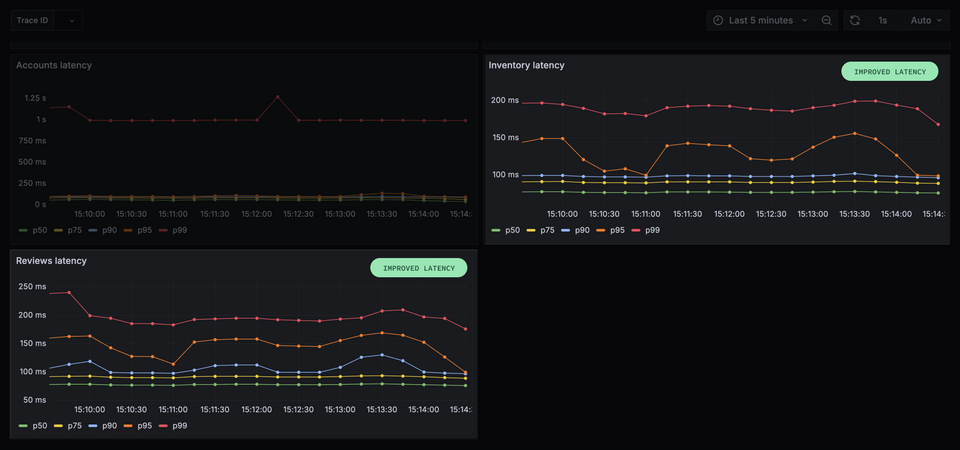

Consider the metrics for each of our four subgraphs.

What we should see is that both reviews and inventory have reasonable request latency: each should have a p99 below 150ms (meaning 99% of requests resolve in 150 milliseconds or less).
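For reference, here is one common way to compute a percentile from raw samples: the nearest-rank method. Dashboards often estimate percentiles from histogram buckets instead, so their figures can differ slightly. The sample values are hypothetical.

```python
import math
from typing import Sequence


def percentile(latencies_ms: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least p% of
    all samples are less than or equal to it."""
    if not latencies_ms:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(latencies_ms)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


samples = [40] * 98 + [1000] * 2  # hypothetical: 98 fast requests, 2 slow ones
print(percentile(samples, 50), percentile(samples, 99))  # 40 1000
```

Two slow requests out of a hundred are enough to push p99 to one second while the median stays at 40ms. This is why the tail percentiles are worth watching separately.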

The accounts subgraph, on the other hand, shows a p99 latency of one second at about 870 requests per second.

And products has a p99 latency of one second at 400 requests per second. (The other percentiles are quite high too! Most requests take much longer to resolve than on reviews and inventory, with the median, p50, close to 500 milliseconds.)

This gives us a good place to start; accounts and products are both good candidates for timeouts and rate limiting.

Subgraph level timeout

To tweak these settings for our subgraphs, we can once again return to our router.yaml configuration file. Under traffic_shaping, we'll add a new property, all, at the same indentation level as the router key.

```yaml
traffic_shaping:
  router:
    timeout: 1s
    global_rate_limit:
      capacity: 500
      interval: 1s
  all:
```

The settings that we apply under all will affect all subgraphs. Let's go ahead and set a global timeout for all of our subgraphs by adding a new timeout property, and a value of 500ms.

```yaml
traffic_shaping:
  router:
    timeout: 1s
    global_rate_limit:
      capacity: 500
      interval: 1s
  all:
    timeout: 500ms
```

As of now, all of our subgraphs have a timeout of 500 milliseconds. But we can get more granular and tweak them one by one as needed by adding a subgraphs property next. We'll add it below the all section, at the same level of indentation.

```yaml
traffic_shaping:
  router:
    timeout: 1s
    global_rate_limit:
      capacity: 500
      interval: 1s
  all:
    timeout: 500ms
  subgraphs:
```

Under subgraphs we can identify specific subgraphs and give them more precise timeouts as needed. Let's add an accounts key, and give this service a timeout of 100ms.

```yaml
traffic_shaping:
  router:
    timeout: 1s
    global_rate_limit:
      capacity: 500
      interval: 1s
  all:
    timeout: 500ms
  subgraphs:
    accounts:
      timeout: 100ms
```
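The override behavior described here can be modeled as a simple lookup. A value under subgraphs.<name> wins; otherwise, the value under all applies. This sketch follows the precedence as the lesson describes it. It is not the router's actual merge logic, which may treat nested options differently. The helper effective_setting is hypothetical.

```python
from typing import Any, Dict, Optional


def effective_setting(traffic_shaping: Dict[str, Any], subgraph: str, key: str) -> Optional[Any]:
    """Resolve one setting for a subgraph: a subgraph-specific value wins,
    otherwise fall back to the `all` section, otherwise None."""
    specific = traffic_shaping.get("subgraphs", {}).get(subgraph, {})
    if key in specific:
        return specific[key]
    return traffic_shaping.get("all", {}).get(key)


# The traffic_shaping section from router.yaml, written as a Python dict.
config = {
    "router": {"timeout": "1s", "global_rate_limit": {"capacity": 500, "interval": "1s"}},
    "all": {"timeout": "500ms"},
    "subgraphs": {"accounts": {"timeout": "100ms"}},
}

for name in ("accounts", "products", "reviews", "inventory"):
    print(name, effective_setting(config, name, "timeout"))
# accounts 100ms
# products 500ms
# reviews 500ms
# inventory 500ms
```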

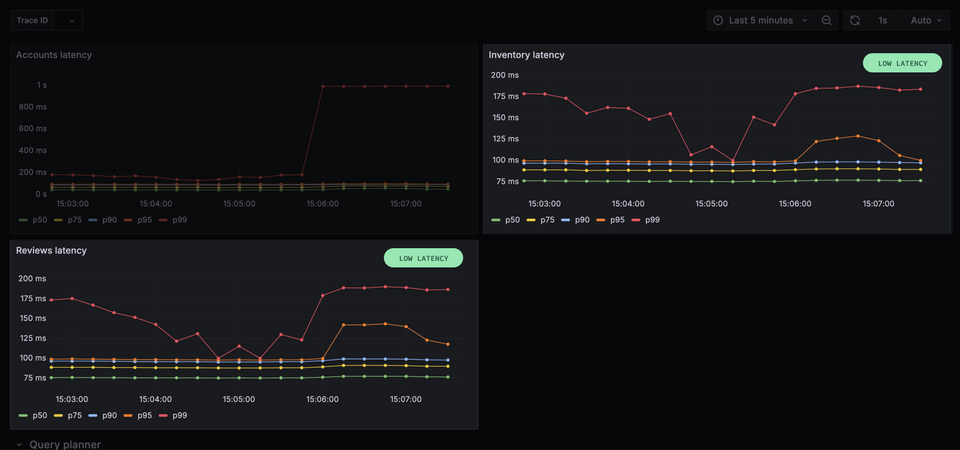

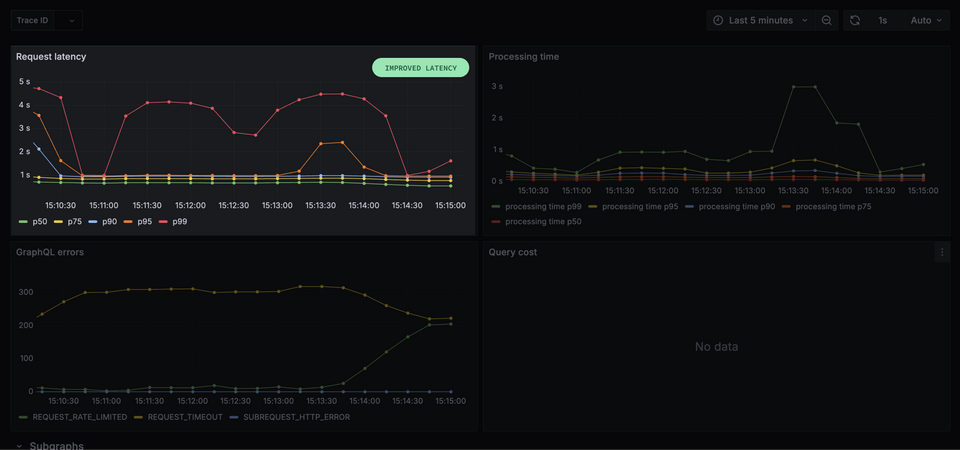

We now observe an effect on client requests. Overall latency is dropping, but still quite high.

But we get a curious result on subgraph requests:

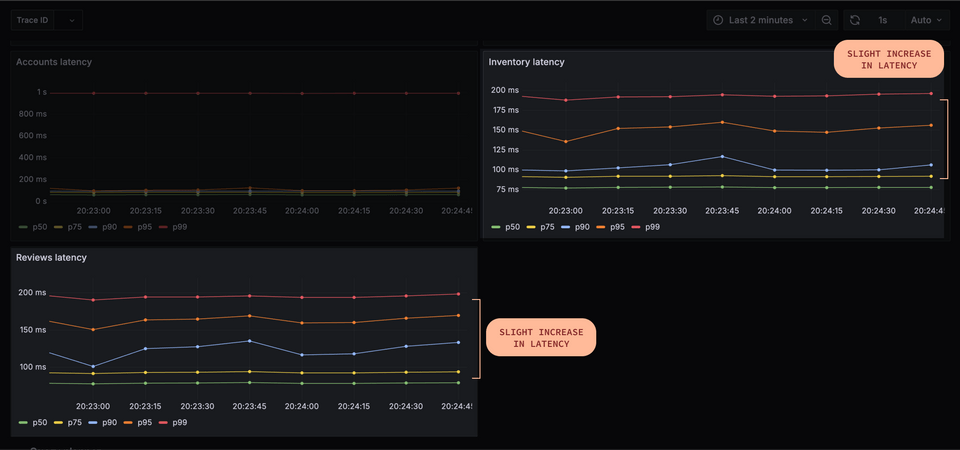

While more requests to the accounts and products subgraphs are now failing with a 504 status code, latency is actually increasing for the reviews and inventory subgraphs.

Take some time to investigate why this is happening. Test various timeout values and see what effect they have. Check the latency of various queries. Do you notice anything?

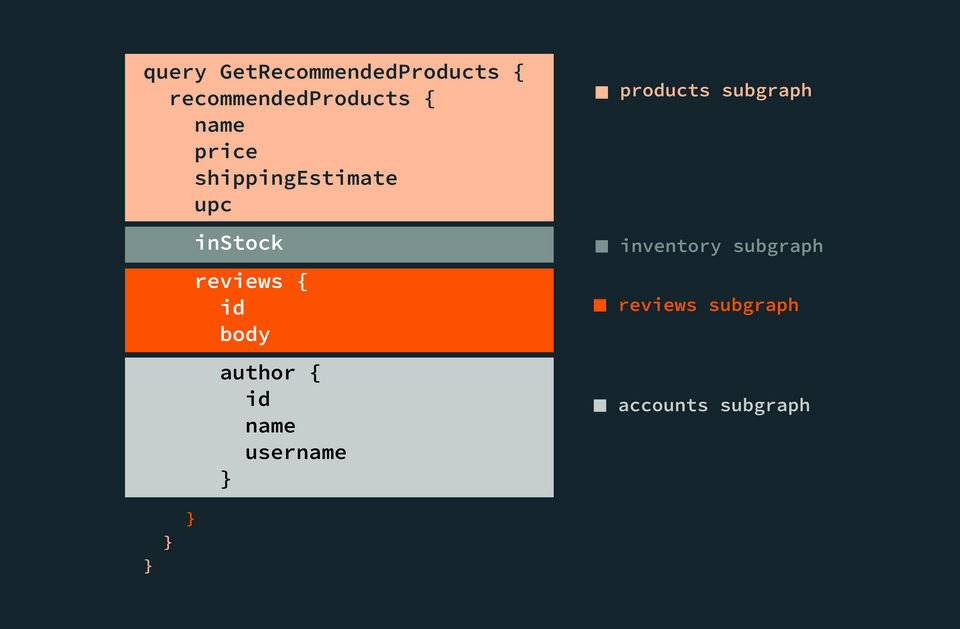

Most of the queries we use here request data from multiple subgraphs in sequence, so changing the performance profile of one subgraph affects the others. Take the following query:

```graphql
query GetRecommendedProducts {
  recommendedProducts {
    name
    price
    shippingEstimate
    upc
    inStock
    reviews {
      id
      body
      author {
        id
        name
        username
      }
    }
  }
}
```

Broken down by subgraph responsibility, we can see the query consists of fields spread across our API.

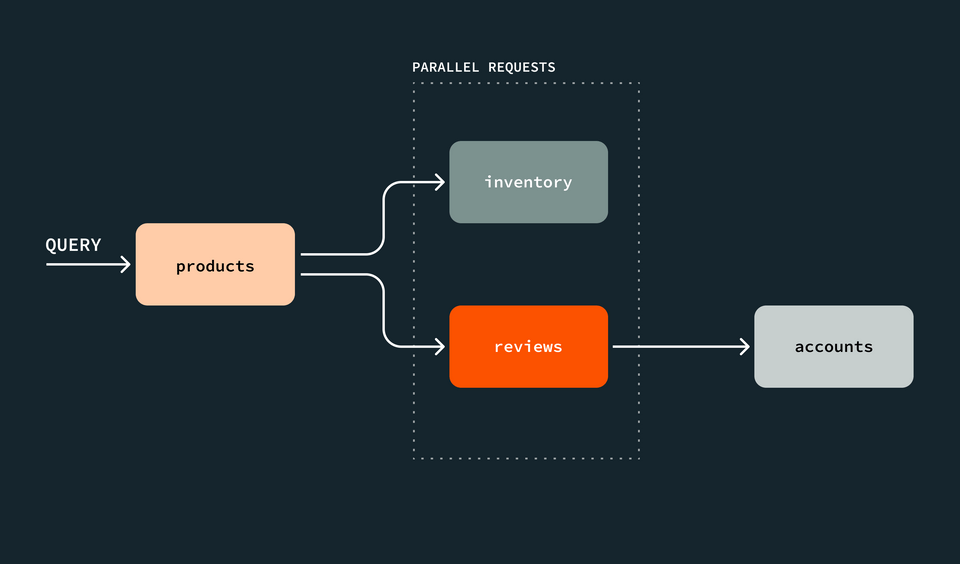

This query will go first through the products subgraph, then in parallel through inventory (for the inStock field) and reviews, then the accounts subgraph for the author details.

The benchmarking client executes that query (along with others) in a loop: as soon as one run finishes, it sends the query again. If the request to the accounts subgraph fails due to the timeout, the query finishes sooner, so the client sends the next one sooner. That next query starts its trip through products again, then immediately goes to reviews and inventory, slightly increasing their traffic. If those requests are expensive for those subgraphs, the extra load can be enough to increase their latency.
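We can put rough numbers on this feedback loop with a toy model of a closed-loop client. The latencies are hypothetical, and each stage of the query plan is simply capped at its timeout. The model also ignores the fact that the extra load would itself push latencies up. It only shows how a faster failure turns into more queries per second for the upstream subgraphs.

```python
from typing import Dict


def iteration_ms(latency_ms: Dict[str, float], timeouts_ms: Dict[str, float]) -> float:
    """Time for one query through the plan: products, then inventory and
    reviews in parallel, then accounts."""
    def stage(name: str) -> float:
        # A fetch that exceeds its timeout is cut off at the timeout.
        return min(latency_ms[name], timeouts_ms.get(name, float("inf")))

    return stage("products") + max(stage("inventory"), stage("reviews")) + stage("accounts")


def queries_per_second(clients: int, latency_ms: Dict[str, float], timeouts_ms: Dict[str, float]) -> float:
    """A closed-loop client sends its next query as soon as the previous one
    finishes, so each client sends 1000 / iteration_ms queries per second."""
    return clients * 1000 / iteration_ms(latency_ms, timeouts_ms)


latency = {"products": 300, "inventory": 50, "reviews": 100, "accounts": 600}  # hypothetical
print(queries_per_second(10, latency, {}))                 # 10.0
print(queries_per_second(10, latency, {"accounts": 100}))  # 20.0
```

With these numbers, capping accounts at 100ms halves the iteration time. The same clients now send twice as many queries, so products, inventory, and reviews each receive twice as many requests.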

This is an effect that can be observed in many GraphQL Federation deployments: subgraphs are not isolated from each other, and can affect each other's performance.

And it's possible to see this effect even without timeouts. Let's say a subgraph is often called in the middle of a complex query, but is slow to respond, to the point where clients tend to abandon the query. Improving that subgraph's performance might mean that clients get their responses faster. However, the subgraphs called further down the line would then see an increase in traffic that they are not ready for.

Here we suffer from an issue similar to the one we saw with client-side traffic shaping: when we apply timeouts on their own, all of the queries still reach the subgraphs. We may need to combine rate limiting with timeouts to better control the traffic.

Subgraph level rate limiting

We'll keep the same traffic shaping options, but this time we'll also set a rate limit of 500 requests per second for accounts:

```yaml
traffic_shaping:
  router:
    timeout: 1s
    global_rate_limit:
      capacity: 500
      interval: 1s
  all:
    timeout: 500ms
  subgraphs:
    accounts:
      timeout: 100ms
      global_rate_limit:
        capacity: 500
        interval: 1s
```
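Unlike a timeout, a rate limit rejects excess requests before they are sent. Here is a minimal fixed-window limiter that admits at most capacity requests per interval and rejects the rest. It illustrates one possible reading of capacity and interval. It is not the router's implementation, which may use a different algorithm such as a token bucket. The class name FixedWindowLimiter is hypothetical.

```python
class FixedWindowLimiter:
    """Admit at most `capacity` requests per `interval_s` seconds; reject the rest."""

    def __init__(self, capacity: int, interval_s: float) -> None:
        self.capacity = capacity
        self.interval_s = interval_s
        self.window_start = float("-inf")  # forces a fresh window on the first call
        self.count = 0

    def allow(self, now_s: float) -> bool:
        # Start a new window once the current interval has elapsed.
        if now_s - self.window_start >= self.interval_s:
            self.window_start = now_s
            self.count = 0
        if self.count < self.capacity:
            self.count += 1
            return True
        return False  # over the limit: this request never reaches the subgraph


# Roughly the accounts traffic from earlier: 870 requests spread over one second.
limiter = FixedWindowLimiter(capacity=500, interval_s=1.0)
allowed = sum(limiter.allow(i / 870) for i in range(870))
print(allowed)  # 500
```

The 370 rejected requests fail immediately instead of occupying the accounts subgraph. This is the load that a timeout alone could not shed.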

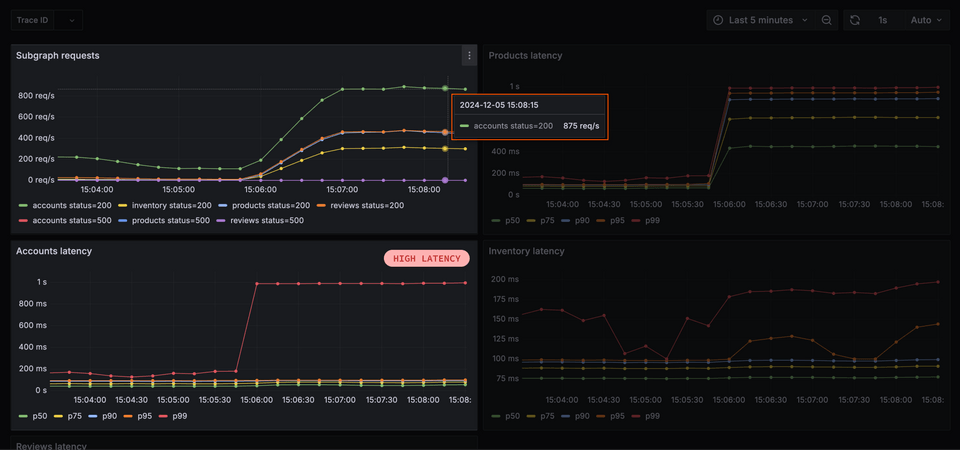

Latency in reviews and inventory immediately drops, so this helped!

And p99 latency for client requests, which was quite volatile between 2s and 4s, is now stabilizing near 1s.

We are finally starting to get a handle on this infrastructure. Limiting traffic on the right subgraph improves overall performance for the entire deployment, at the cost of missing data in some client responses.


Key takeaways

- We can use the router's traffic shaping configuration to limit traffic at a subgraph level.

- Any settings we apply at the all level will apply to all subgraphs the router communicates with.

- We can use the subgraphs key to specify particular timeout and rate limit values for individual subgraph services.

Up next

Client requests are now getting a stable latency, but at one second, it's still not great. Coming up next, we'll get a huge performance boost with query deduplication.
