Overview

Let's draw some conclusions from the data we gathered, and start addressing the problems in our system.

In this lesson, we will:

- Reduce inbound traffic with rate limiting and timeouts

Revisiting requests per second

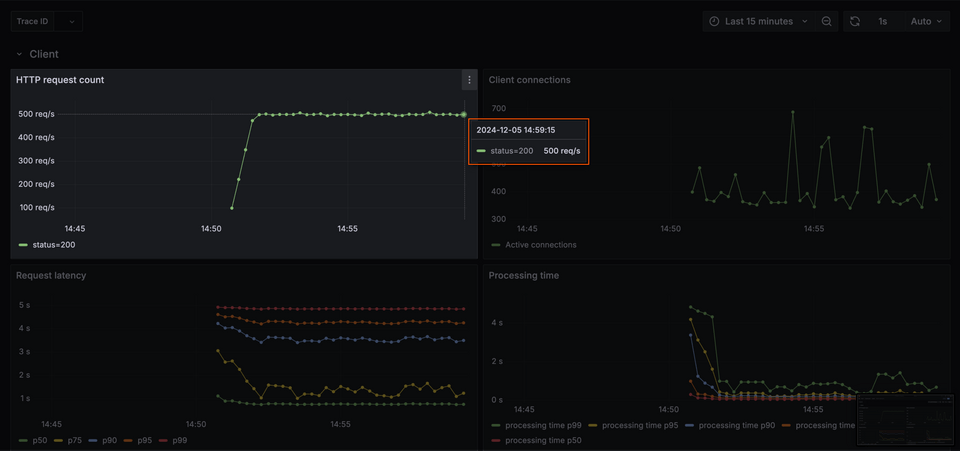

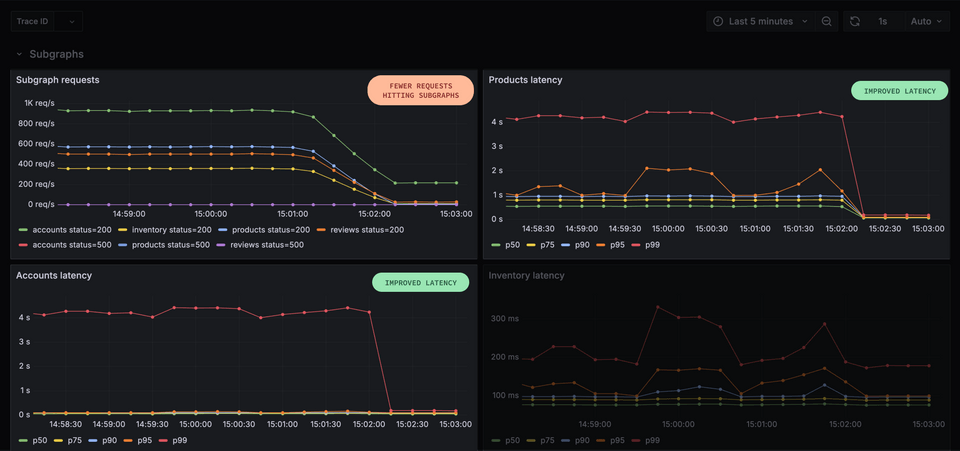

Let's review our key data points. The first is the average number of requests per second, which we see reflected in our HTTP request count panel: around 500 requests per second.

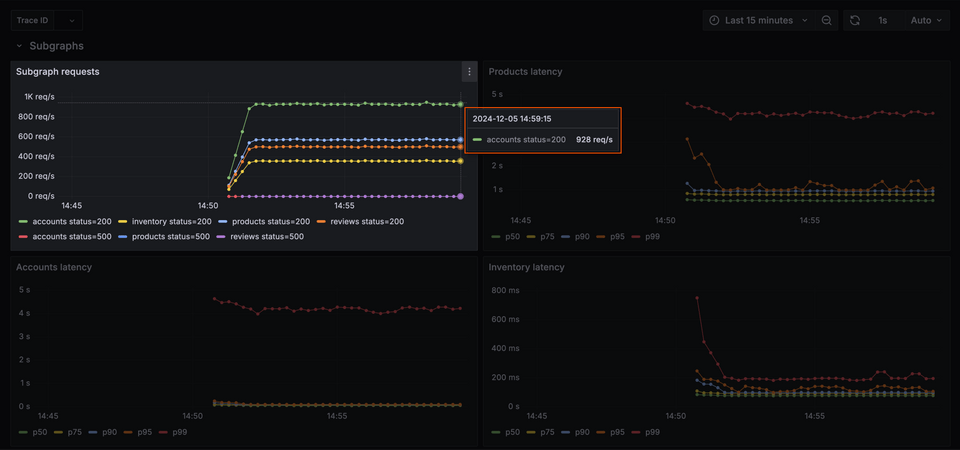

When we compare the total requests per second received from clients with the total requests per second handled by our subgraphs, the numbers don't line up. Our accounts subgraph alone, for example, is handling far more requests than clients are even sending: close to 1000 per second!

What's going on here?

Traffic from router to subgraph

How can it be that our subgraphs are receiving more requests than clients are even sending?

What we're seeing is the amplification effect of federated queries: a client can make a single request to the router, which then executes and manages a series of smaller requests to backend services. This is really helpful for the client; it gets to send all of its queries to the router, rather than managing multiple calls to multiple services.

Unfortunately, it means that subgraphs can suffer from the increased traffic. Furthermore, it's often hard to predict in advance how much load our subgraphs will receive; new queries can pop up, and schema updates from other subgraphs can affect how queries end up being executed.
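To make the amplification concrete, here is a minimal Python sketch. It models the query plan for a single client request as a list of subgraph fetches, then multiplies by the client request rate. The plan is hypothetical: only the accounts subgraph comes from our dashboard, while the `products` and `reviews` subgraphs and the fetch order are assumptions for illustration.

```python
from collections import Counter

# Hypothetical plan for ONE client request: each entry is a subgraph fetch the
# router makes. Only "accounts" appears in our dashboard; "products" and
# "reviews" are made-up subgraph names for illustration.
HYPOTHETICAL_PLAN = ["accounts", "products", "reviews", "accounts"]


def subgraph_rates(plan: list[str], client_rps: float) -> dict[str, float]:
    """Requests per second each subgraph receives if every client request
    executes `plan`."""
    fetches_per_request = Counter(plan)
    return {name: count * client_rps for name, count in fetches_per_request.items()}


if __name__ == "__main__":
    for name, rps in subgraph_rates(HYPOTHETICAL_PLAN, client_rps=500).items():
        print(f"{name}: {rps:.0f} requests/second")
```

With 500 client requests per second, a plan that visits accounts twice puts about 1000 requests per second on accounts. If a new operation or a schema change adds one more accounts fetch to the plan, accounts traffic rises by another 500 requests per second with no change in client traffic.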

The router's main job is to be a proxy for these requests. By default, it will happily accept as many requests as it can and delegate smaller requests to subgraphs, even if they can't handle the load! But with a little configuration, we can use the router to protect the subgraphs from too much traffic, as well as make some guarantees about the traffic they receive.

Limiting inbound traffic

Let's set aside our search for the precise sources of latency for a moment. For a quick fix, we could start by reducing client traffic to levels that our infrastructure can actually handle. The router's traffic shaping feature is the tool for the job.

We'll return to our built-in editor at http://localhost:8080 and open up the router.yaml configuration file.

The first thing we can do here is put a hard limit on the time spent per request. We'll add a new top-level property to our configuration file called traffic_shaping. Beneath it, nest a router property, and beneath that, a timeout property. Here, we can provide a timeout value of 100ms.

```yaml
traffic_shaping:
  router:
    timeout: 100ms
```

This timeout value of 100 milliseconds is applied to all requests involving the router. This includes any requests a client makes to the router; any requests the router makes to subgraphs; and any initial requests a subgraph makes to the router as part of the subscription callback setup. Check out the official documentation on the router's traffic shaping feature to learn more.
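The behavior we expect from a request timeout can be sketched in Python with `asyncio.wait_for`: work that finishes within the deadline succeeds, and anything still running when the deadline passes is cancelled and reported as a 504. This is only a model of the idea, not how the router is implemented; the `handle_request` helper and the simulated query durations are made up for illustration.

```python
import asyncio

# Mirrors `timeout: 100ms` from router.yaml, expressed in seconds.
ROUTER_TIMEOUT_S = 0.1


async def execute_query(duration_s: float) -> str:
    """Stand-in for executing a query; its duration is simulated with sleep."""
    await asyncio.sleep(duration_s)
    return "data"


async def handle_request(duration_s: float) -> int:
    """Return an HTTP-style status code: 200 if the query finishes within the
    deadline, 504 (Gateway Timeout) if it is cancelled."""
    try:
        await asyncio.wait_for(execute_query(duration_s), timeout=ROUTER_TIMEOUT_S)
        return 200
    except asyncio.TimeoutError:
        return 504


async def main() -> list[int]:
    # One fast query (20ms) and one slow query (300ms), run concurrently.
    return await asyncio.gather(handle_request(0.02), handle_request(0.3))


if __name__ == "__main__":
    print(asyncio.run(main()))  # → [200, 504]
```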

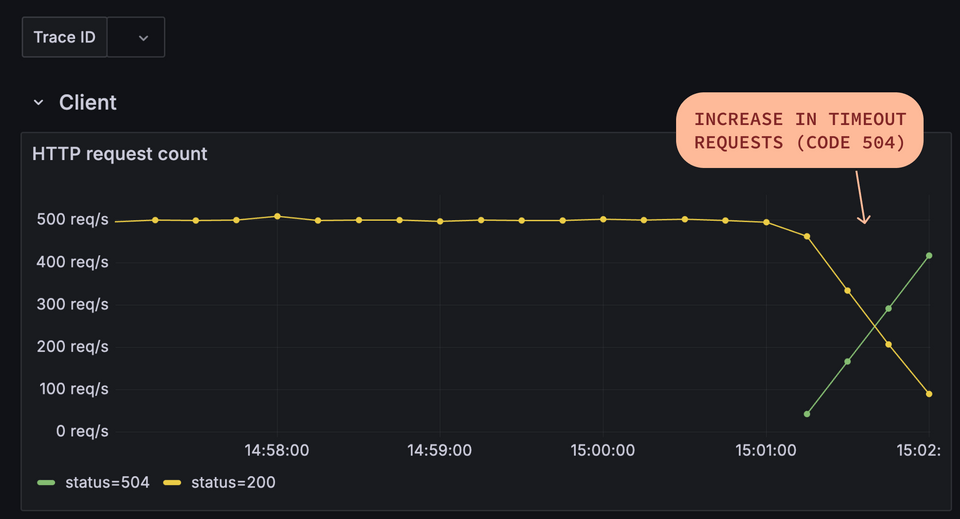

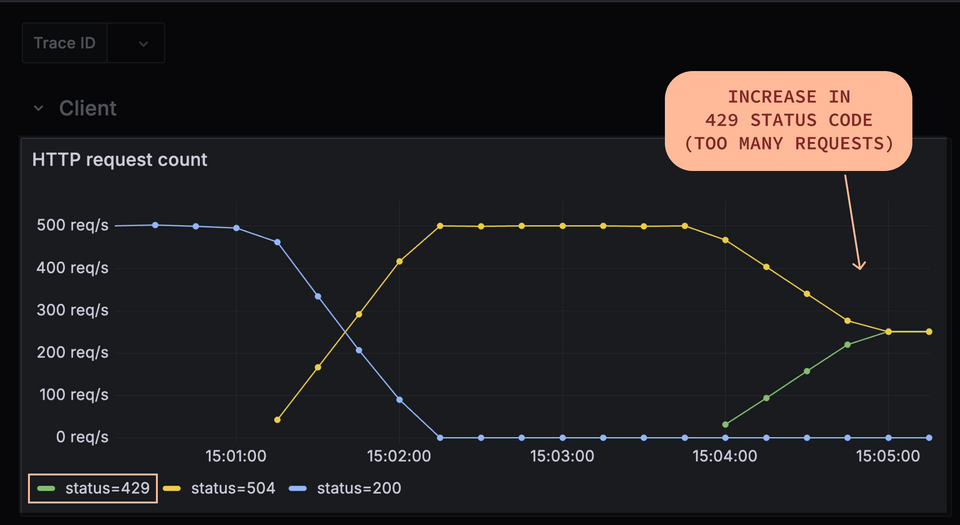

When we save the file, the router will reload the new configuration while continuing to handle existing traffic. Pretty quickly, we'll start to see the number of successful requests (status code 200) dip and the number of timed-out requests (status code 504) climb.

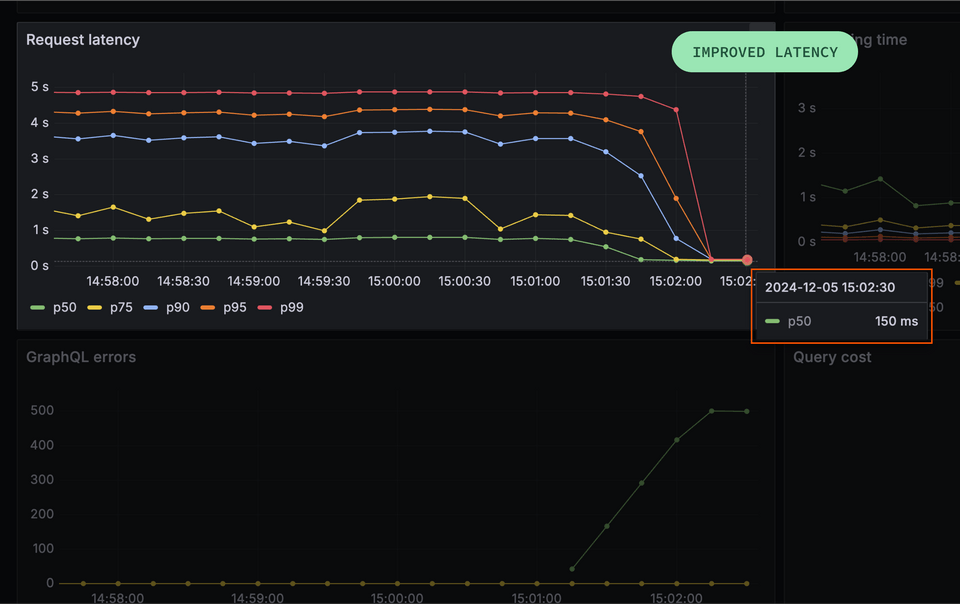

With this change, we'll also see that client request latency drops. This looks good, but of course it's happening because those long, intensive requests are now timing out. They're no longer adding to our latency, but they're also no longer getting through.
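The arithmetic behind this trade-off can be sketched in a few lines of Python. The latencies below are made-up sample values, not measured data, and the model assumes a timed-out request is reported exactly at the deadline, ignoring any extra overhead.

```python
from statistics import mean

TIMEOUT_MS = 100  # the router timeout from router.yaml, in milliseconds

# Made-up latencies (ms) for six client requests; not measured data.
SAMPLE_LATENCIES_MS = [40, 60, 80, 90, 250, 400]


def with_timeout(latencies_ms: list[float], timeout_ms: float) -> tuple[float, float]:
    """Return (mean observed latency, success ratio) when every request is cut
    off at timeout_ms. A timed-out request is modeled as observed at the deadline."""
    observed = [min(latency, timeout_ms) for latency in latencies_ms]
    successes = sum(1 for latency in latencies_ms if latency <= timeout_ms)
    return mean(observed), successes / len(latencies_ms)


if __name__ == "__main__":
    print(f"no timeout: mean {mean(SAMPLE_LATENCIES_MS):.1f} ms, 100% success")
    avg, ok = with_timeout(SAMPLE_LATENCIES_MS, TIMEOUT_MS)
    print(f"{TIMEOUT_MS} ms timeout: mean {avg:.1f} ms, {ok:.0%} success")
```

In this sample, mean latency roughly halves (about 153 ms down to about 78 ms), but only two thirds of the requests succeed: the improvement comes entirely from cutting off the slow requests.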

Wondering why our latency is higher than the 100ms we set? We'll discuss this further in Lesson 8: Query Planning!

So, what's the result of making this change? Well, we've definitely made things worse for our clients. Instead of waiting a long time for data, they get timeout errors and no data. But the positive side of this change is that now our subgraph services are not being overloaded with requests.

We'll see from inspecting our metrics that while the total number of client requests remains the same, the number of subgraph requests drops. Due to our timeout setting, the router is now aborting query execution before all of the subgraph requests have been completed. The requests take too long—so they're abandoned!
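A rough model of why subgraph traffic falls: if the fetches in a plan run one after another, the router stops sending new fetches once the deadline has passed. The Python sketch below assumes a purely sequential plan with hypothetical subgraph names and made-up latencies; real query plans can also run fetches in parallel.

```python
from typing import Optional

# Hypothetical sequential plan: (subgraph, fetch latency in ms). The subgraph
# names and latencies are illustrative assumptions, not measurements.
SEQUENTIAL_PLAN = [("accounts", 30), ("products", 50), ("reviews", 40), ("accounts", 30)]


def fetches_issued(plan: list[tuple[str, int]], timeout_ms: Optional[int]) -> int:
    """Count subgraph requests sent before execution is aborted.

    Fetches run one after another; a fetch is only started while the deadline
    has not passed. A fetch already in flight at the deadline still counts,
    because the subgraph has received it.
    """
    elapsed_ms = 0
    issued = 0
    for _subgraph, latency_ms in plan:
        if timeout_ms is not None and elapsed_ms >= timeout_ms:
            break  # deadline passed: the remaining fetches are never sent
        issued += 1
        elapsed_ms += latency_ms
    return issued


if __name__ == "__main__":
    print("no timeout:", fetches_issued(SEQUENTIAL_PLAN, None))    # → 4
    print("100ms timeout:", fetches_issued(SEQUENTIAL_PLAN, 100))  # → 3
```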

Rate limiting

Even if we're seeing improved latency (because longer requests are being abandoned), another problem area to tackle might be a request rate that's too high for our infrastructure.

With our production environment running locally, one solution might be to go out and buy a powerful laptop, but a quicker solution would be to reduce the rate of incoming requests.

To do this, we'll build upon our router's existing traffic shaping config. Beneath our timeout property, we'll add a new property called global_rate_limit.

```yaml
traffic_shaping:
  router:
    timeout: 100ms
    global_rate_limit:
      # capacity and interval are added in the next steps
```

Nested under global_rate_limit, we need to define two properties: capacity and interval.

With capacity, we define the maximum number of requests we want to allow within some interval of time. Let's set a maximum value of 250 requests.

```yaml
traffic_shaping:
  router:
    timeout: 100ms
    global_rate_limit:
      capacity: 250
```

We'll use our next property, interval, to specify the length of the time window that this capacity applies to. We'll set this value to 1s.

```yaml
traffic_shaping:
  router:
    timeout: 100ms
    global_rate_limit:
      capacity: 250  # maximum number of requests...
      interval: 1s   # ...allowed per interval
```
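To build intuition for capacity and interval, here is a minimal Python model of a fixed-window rate limiter. The fixed-window algorithm and the `FixedWindowRateLimiter` and `simulate` names are assumptions for illustration, not the router's implementation; the router may count requests differently, especially at window boundaries.

```python
class FixedWindowRateLimiter:
    """Admit at most `capacity` requests per `interval_s`-second window.

    Assumption: a fixed-window counter is used here as a simple model of
    `capacity` and `interval`; the router's own algorithm may differ.
    """

    def __init__(self, capacity: int, interval_s: float) -> None:
        self.capacity = capacity
        self.interval_s = interval_s
        self.current_window = 0
        self.count = 0

    def allow(self, now_s: float) -> bool:
        """Return True if a request arriving at `now_s` (seconds) is admitted."""
        window = int(now_s // self.interval_s)
        if window != self.current_window:
            # A new interval has started, so the counter resets.
            self.current_window = window
            self.count = 0
        if self.count < self.capacity:
            self.count += 1
            return True
        return False  # over capacity; the router responds with a 429


def simulate(rps: int, seconds: int, limiter: FixedWindowRateLimiter) -> tuple[int, int]:
    """Send `rps` evenly spaced requests per second; return (accepted, rejected)."""
    accepted = rejected = 0
    for i in range(rps * seconds):
        if limiter.allow(now_s=i / rps):
            accepted += 1
        else:
            rejected += 1
    return accepted, rejected


if __name__ == "__main__":
    limiter = FixedWindowRateLimiter(capacity=250, interval_s=1.0)
    print(simulate(rps=500, seconds=3, limiter=limiter))  # → (750, 750)
```

At a steady 500 requests per second, this model admits 250 and rejects 250 in every one-second window, so about half of the client traffic is turned away.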

Let's save our file, and wait for the new configuration to load in our environment. Back in our Grafana dashboard, we should start to see changes.

Now, about half of the traffic from clients is rejected with the status code 429 (too many requests).

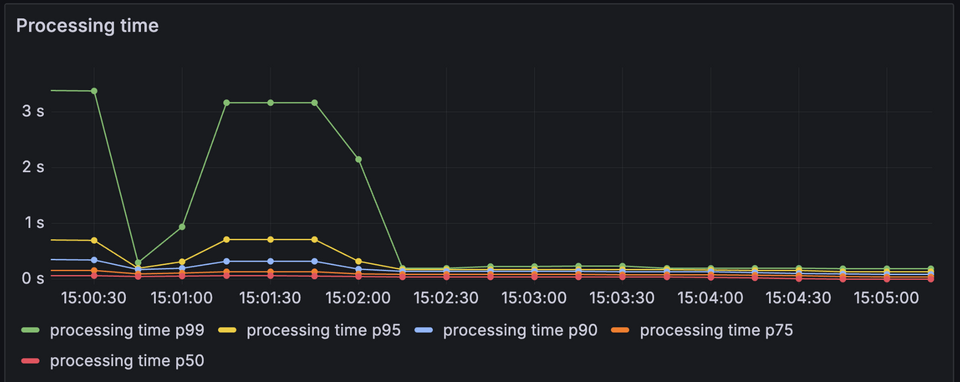

As a result, our total router processing time has also dropped. Instead of beginning to execute queries and then aborting, the router rejects them outright earlier in the process. This results in less work for both the router and the subgraphs, which now have less total traffic to deal with.

Both of these tools (request timeouts and rate limiting) are helpful options when we need to protect the router and subgraphs from too much inbound traffic, but unless we're careful, we can end up trading one poor experience for another. Here we can see that while we've reduced the load on our services, client experience has suffered greatly, with more errors than ever before. Fortunately, we have some additional tooling to explore that can help us with this, on the subgraph side of our system.


Key takeaways

- A single client request to the router can result in many smaller requests to our backend services. As a result, subgraph services can receive more traffic than the number of client requests sent to the router.

- To limit inbound traffic to the router, we can use the traffic_shaping property in the configuration file.

- We have two primary mechanisms to shape the traffic that the router receives: timeouts and rate limiting.

- With rate limiting we can set a maximum number of requests we wish to permit within some interval of time.

- Rate limiting and timeouts can protect the router and underlying subgraph services from too much traffic, but they should be deployed with care to keep from degrading client experiences.

Up next

In the next lesson, we'll shift gears and explore more granular traffic shaping for our subgraphs.
