Overview

We have a few other tools we can reach for to understand what's happening in a system. Traces and logs come in handy when we want a play-by-play of what might be going wrong.

In this lesson, we will:

- Explore traces and spans

- Learn how to access the router's logs

Traces

Traces are another good way of exploring what's going on in our system. We can access them by navigating to Explore in the left-hand menu, or by clicking this link.

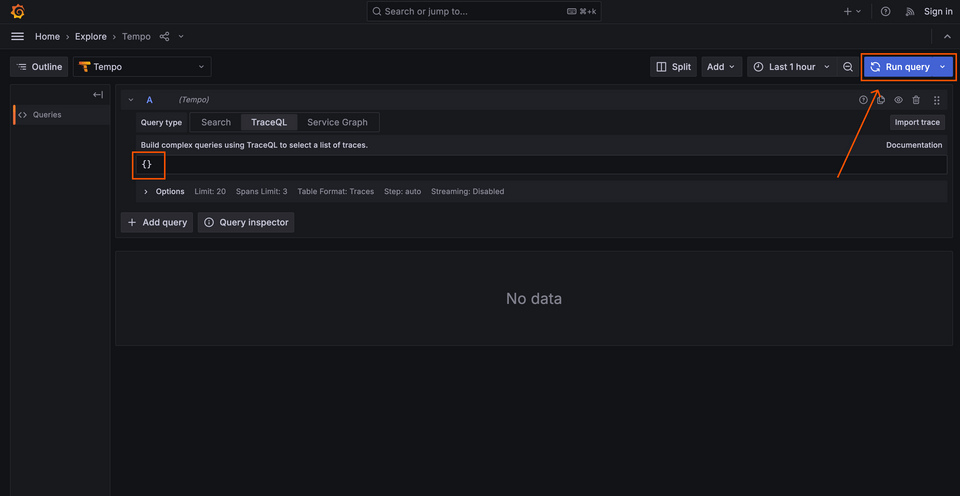

By default, this will bring us to an interface with Tempo selected from the main dropdown. Below this, we'll see an input where we'll enter in opening and closing curly braces ({}) to show all traces.

Next, click the blue Run query button to get our results.

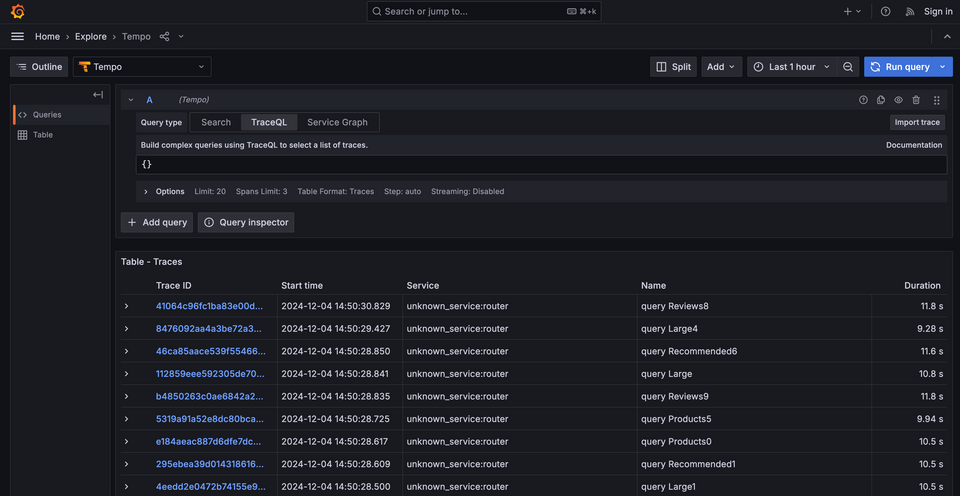

Let's look closer at one of our traces. Click directly on one of the values in the Trace ID column. (Look for one which unknown_service:router under the Service column.) This opens up another panel which lets us take a closer look at this particular trace. Alternatively, you can right-click on the Trace ID value and open the data in a new tab.

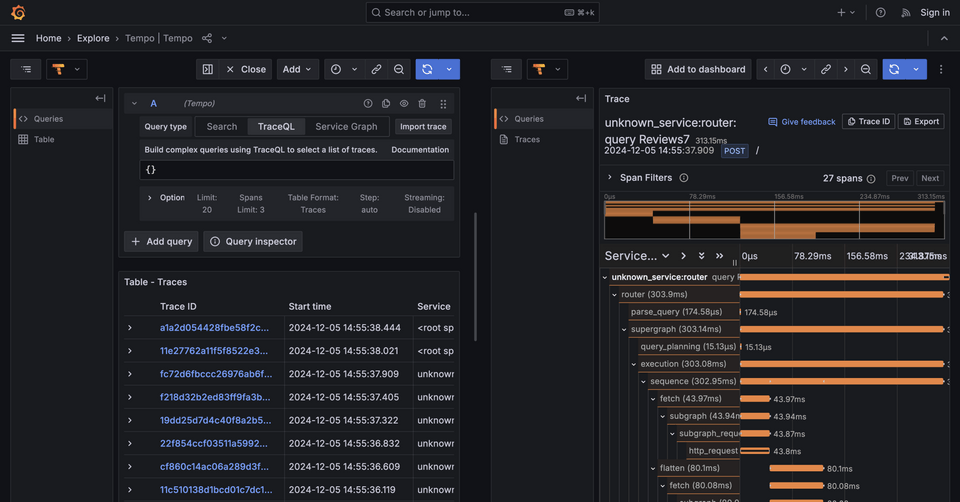

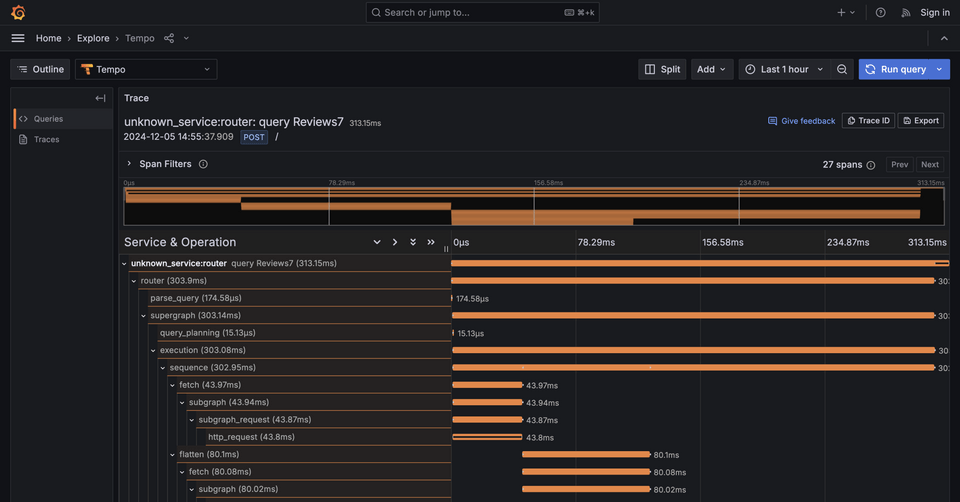

A trace is a way to represent the execution of a query, and the various systems involved in the process. The top level line, called a "span", represents the time spent by the initial request in the system. Spans can correspond to different parts of a single service (the router by itself generates many!) or different services.

In this example, the top-level span is labeled query Reviews7. We can see that it executed in 313.15ms.

Just beneath it, there's a router span with a slightly shorter duration, 303.9ms. This tells us that close to the total execution time was spent in the router span and its children. And just beneath router we can further explore its child spans (such as supergraph).

By looking at which spans take the most time, or considering the gaps between a parent and child span, we can infer where the query has spent most of its execution time. This can help point us in the direction of a bottleneck in a service, or a particularly expensive part of the code being executed.

Spans can also indicate where an error was raised, allowing us to identify the source of the problem even more quickly. We can see that some spans take a long time to execute—in particular, the spans for query execution. This span records the point in execution when the router calls into the subgraph services. If our subgraph services were set up to record traces as well, we'd see those reflected here as children spans.

So if the subgraphs are taking a long time to execute, perhaps we'll find the source of our latency there? Possibly—but we're not done digging yet! Let's explore logs.

Exploring logs

It's common to see erratic behavior when a service is overloaded. This can result in the service failing to handle some requests, throwing errors, or becoming unresponsive. We can get a better idea of what's going on under the hood by exploring our logs.

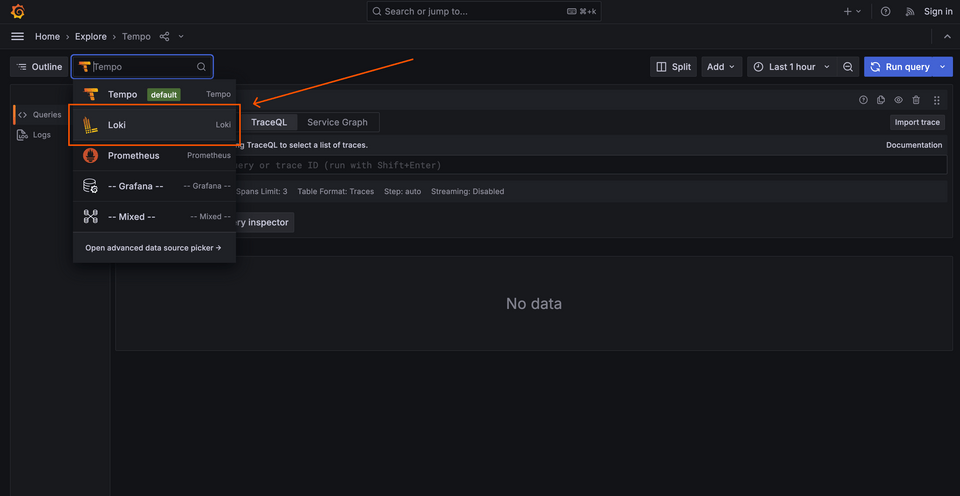

We can access the router logs on the "Explore" page in Grafana as well. Let's click the menu where Tempo is specified, and change the option to Loki, to match the service we're using in our Docker process to collect router logs.

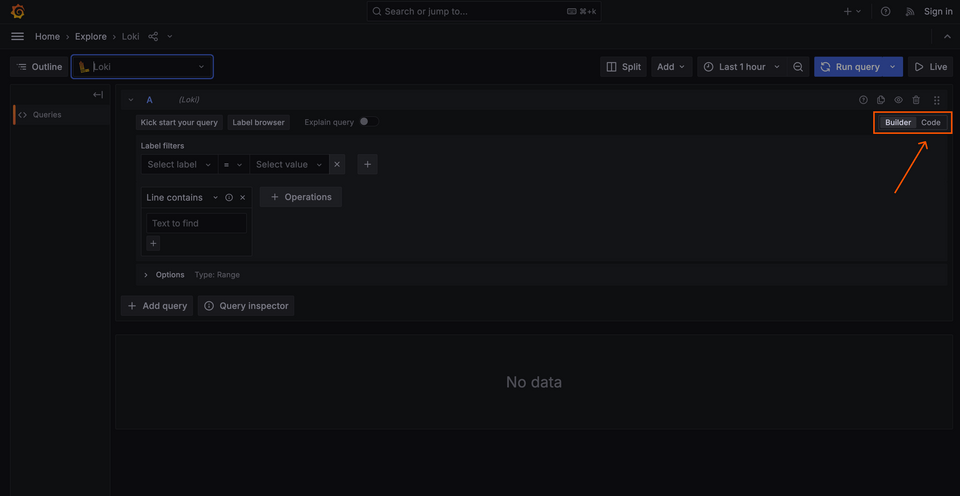

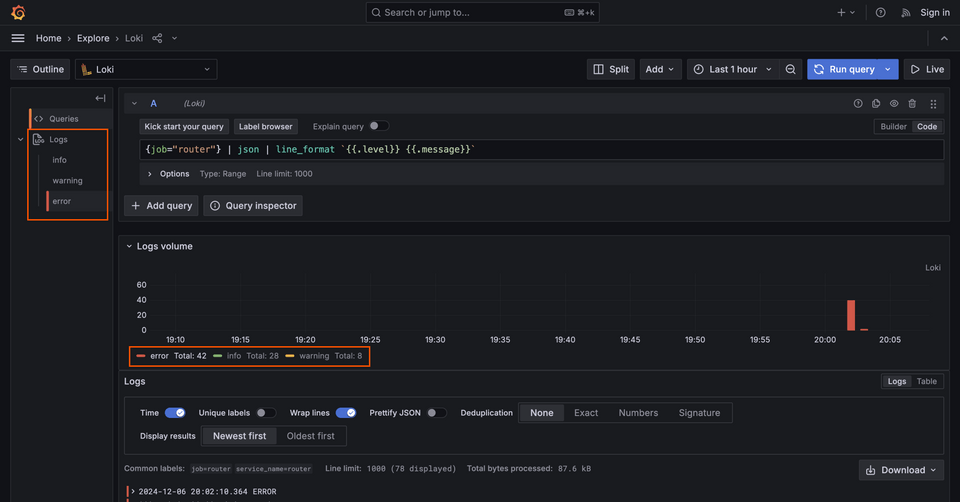

We'll see the interface update with the Loki query builder. Rather than construct our query using their prebuilt parameters, we'll switch our mode from Builder to Code. You'll find this option on the far right.

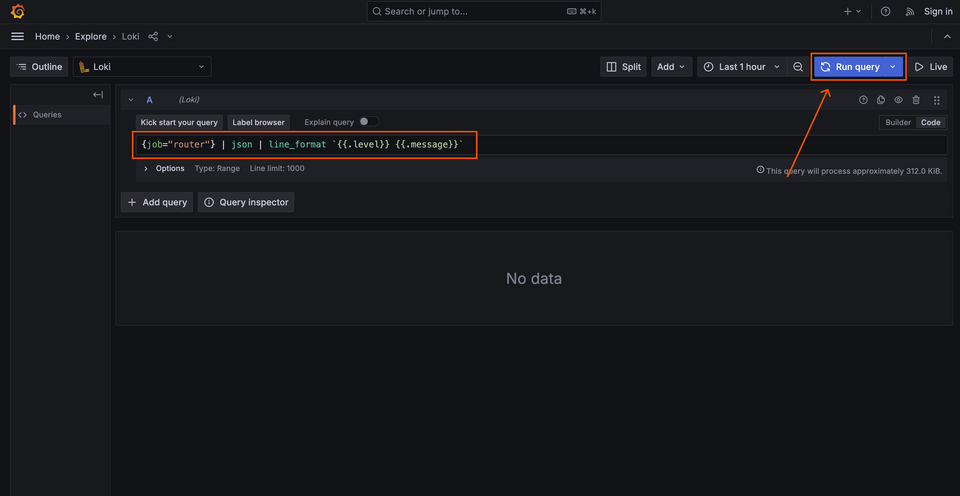

This gives us an input where we can write our own query. Let's copy in the following query, which gives us all of the logs from the router, and click the blue Run query button.

{job="router"} | json | line_format `{{.level}} {{.message}}`

Here's what this query does.

- First, it fetches all log lines that match the filter of

{job="router"}. - Next, it extracts keys and values from log lines formatted as

json, and uses them as labels. - Finally, the log line is replaced using a specific

line_format; specifically, with the errorlevel, followed by themessage.

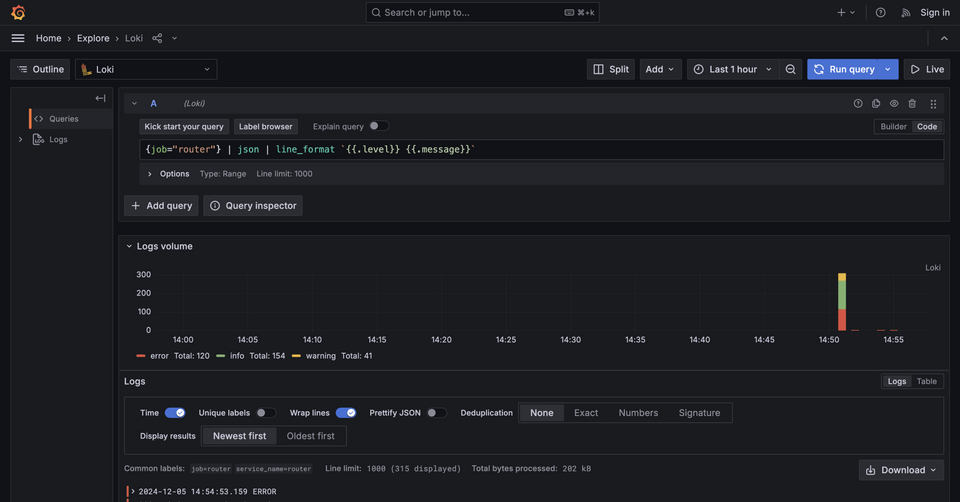

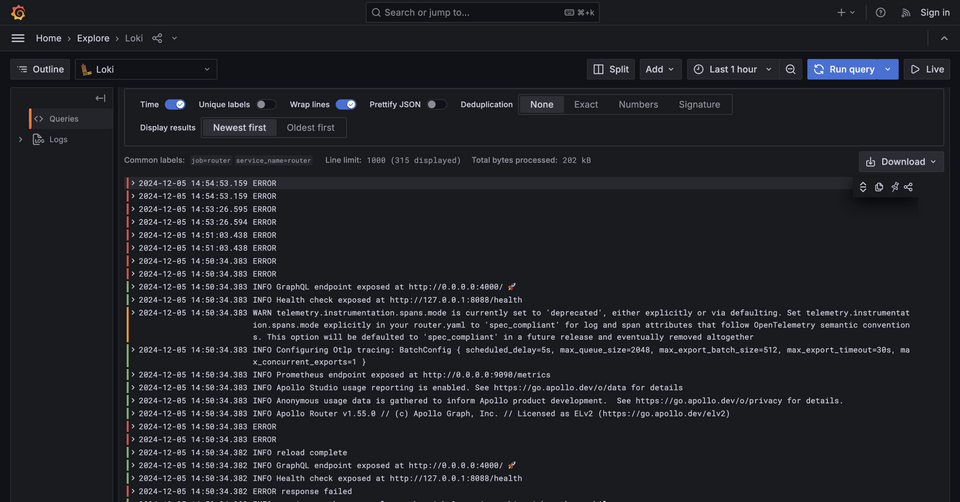

We see some errors in the logs:

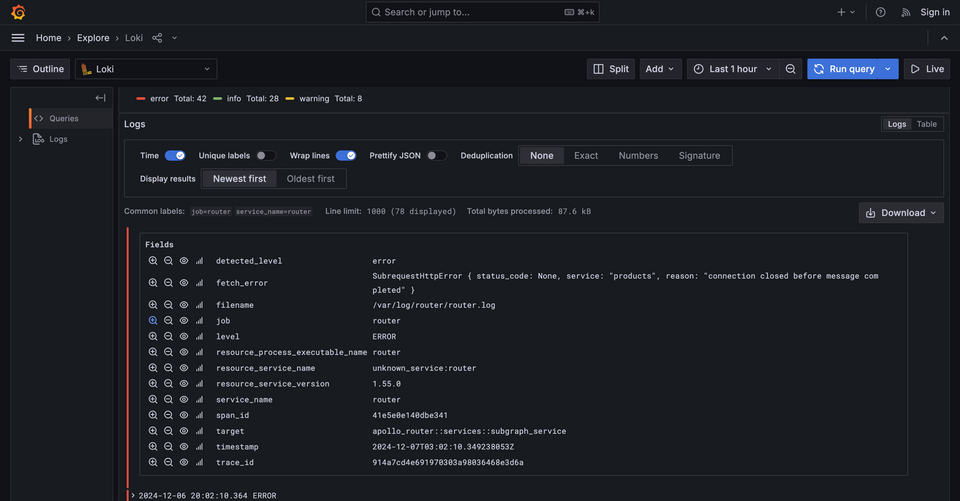

You can click into each error for greater detail, such as the error message and the service it originated in.

We can also explore the different logs by category, and select info, warning, or error from the menu on the left.

Practice

Drag items from this box to the blanks above

architecture

trace

metric dashboard

performance

span

compromised security

service

a bottleneck in performance

Key takeaways

- Traces are visual depictions of how a particular request moves through our system. We can see a breakdown of time spent in various services, and the different stages of work completed.

- Each block of work represented in a trace is called a span.

- Logs are text-based messages outputted by a system. We can inspect logs to trace where an error occurred, or identify the details of a request's path through our system.

Up next

We've touched on metrics, traces (and spans), and logs; and with some high latency rates, and a few lengthy traces, we're on the right track. In the next lesson, we'll dissect the data we gathered and begin making some fixes.

Share your questions and comments about this lesson

This course is currently in

You'll need a GitHub account to post below. Don't have one? Post in our Odyssey forum instead.