Overview

Let's begin our investigation in the Grafana dashboard connected to our router.

In this lesson, we will:

- Identify our primary metrics for monitoring service health

Exploring Grafana

With your Docker process running, we'll jump back over to our Grafana router dashboard.

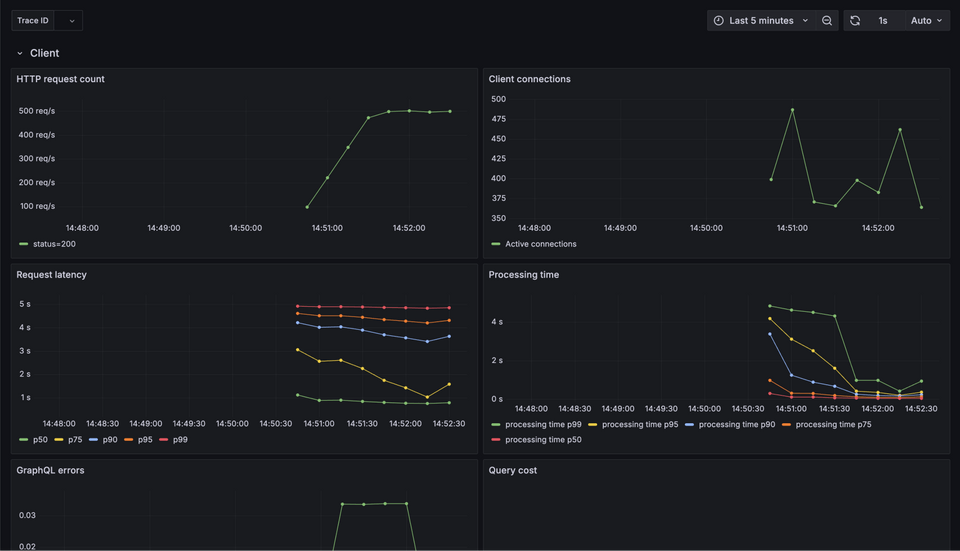

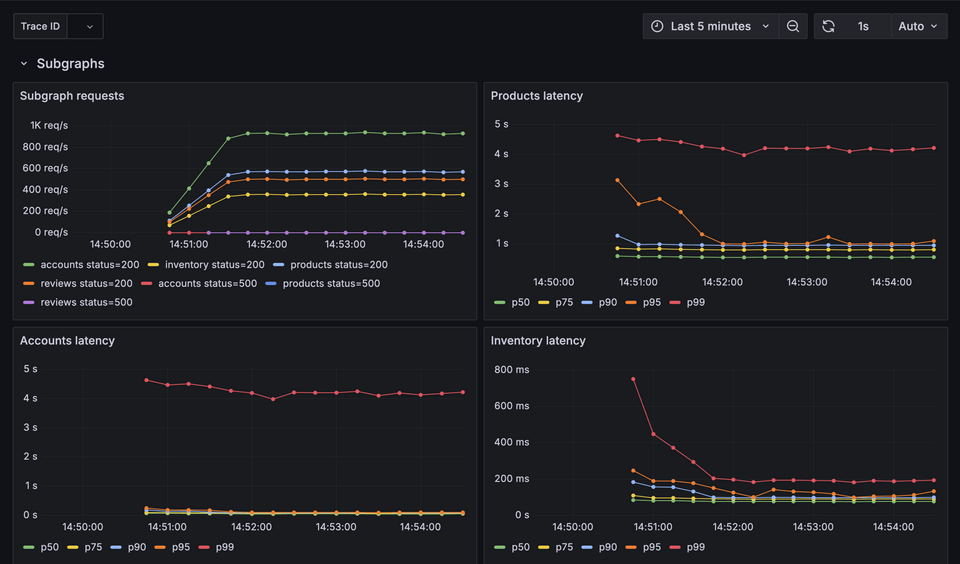

Already we should see that there's a lot happening here. Our router is receiving requests and routing them across our federated API to be resolved by the appropriate subgraph. The dashboard is composed of several different metrics we're tracking, sorted into a few discrete categories:

- Client: Metrics concerned with client experience, client requests, and latency.

- Subgraphs: Metrics specific to our underlying subgraph services. We should see a panel for latency in each of our four subgraphs.

- Query planner: Metrics from the router's query planner, which devises the "instructions" (the query plan) that the router follows to resolve each query.

Take a few moments to scroll through this dashboard. Yours likely won't look exactly like ours, but you should see some data coming through.

Let's highlight some of the most important data here.

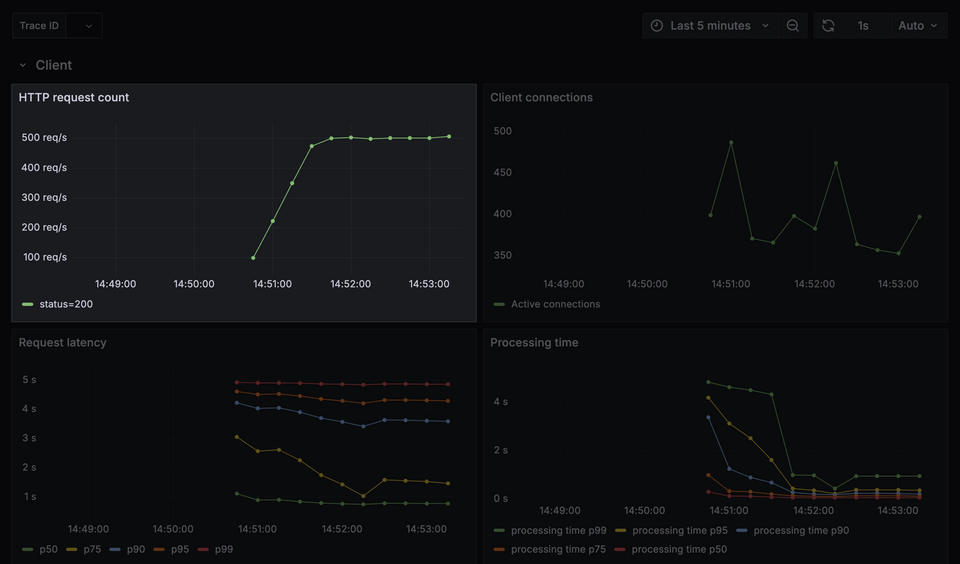

HTTP request count

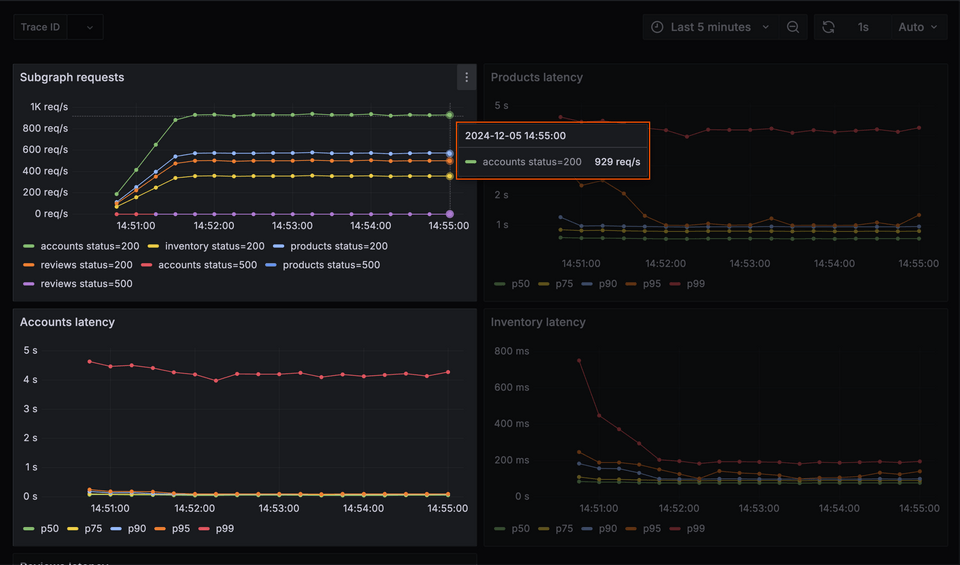

The first panel under the Client section, HTTP request count, shows us the number of requests the router receives per second. This number fluctuates slightly over time, but we should see our line approaching 500 requests per second. Hover over any plotted point to see its timestamp, along with the number of requests received at that time.

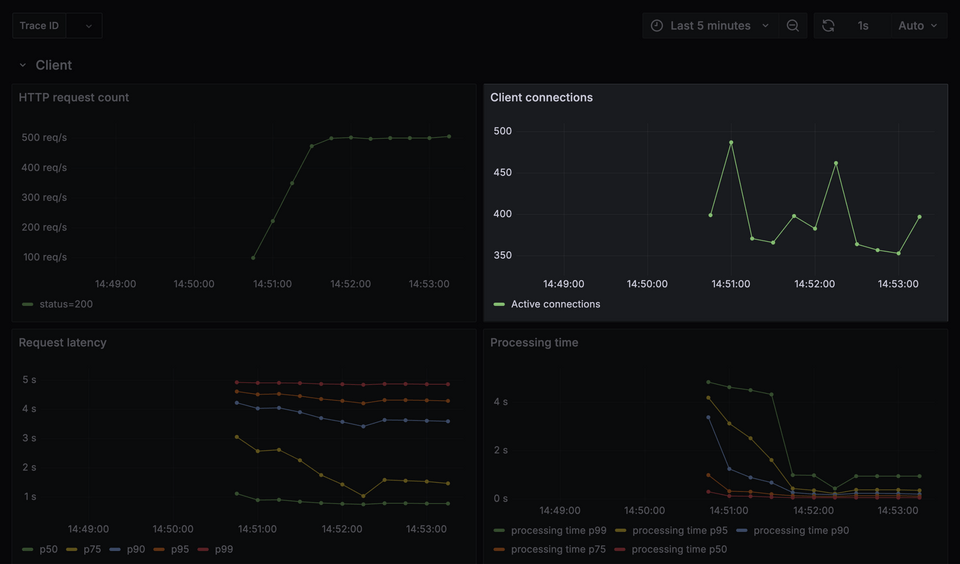
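To make the "requests per second" number concrete, here's a minimal sketch of how a per-second rate can be derived from raw data. It assumes the panel is built from a cumulative request counter sampled at regular intervals, which is a common pattern for time series dashboards; the lesson doesn't show the panel's actual query, and the function name and sample values below are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, cumulative request count)


def per_second_rate(samples: List[Sample]) -> List[Tuple[float, float]]:
    """Convert cumulative counter samples into (timestamp, requests per second)."""
    rates = []
    for (t0, c0), (t1, c1) in zip(samples, samples[1:]):
        elapsed = t1 - t0
        # Skip pairs we can't interpret: no elapsed time, or a counter
        # that went backwards (for example, after a process restart).
        if elapsed <= 0 or c1 < c0:
            continue
        rates.append((t1, (c1 - c0) / elapsed))
    return rates


# Hypothetical samples taken every 15 seconds.
samples = [(0.0, 0.0), (15.0, 7350.0), (30.0, 14850.0), (45.0, 22200.0)]
rates = per_second_rate(samples)

for timestamp, rate in rates:
    print(f"t={timestamp:>4.0f}s  {rate:.0f} req/s")
```

Each plotted point on a panel like this corresponds to one of these (timestamp, rate) pairs, which is what we see when hovering over the line.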

Client connections

The next panel reflects how many active client connections we have over time.

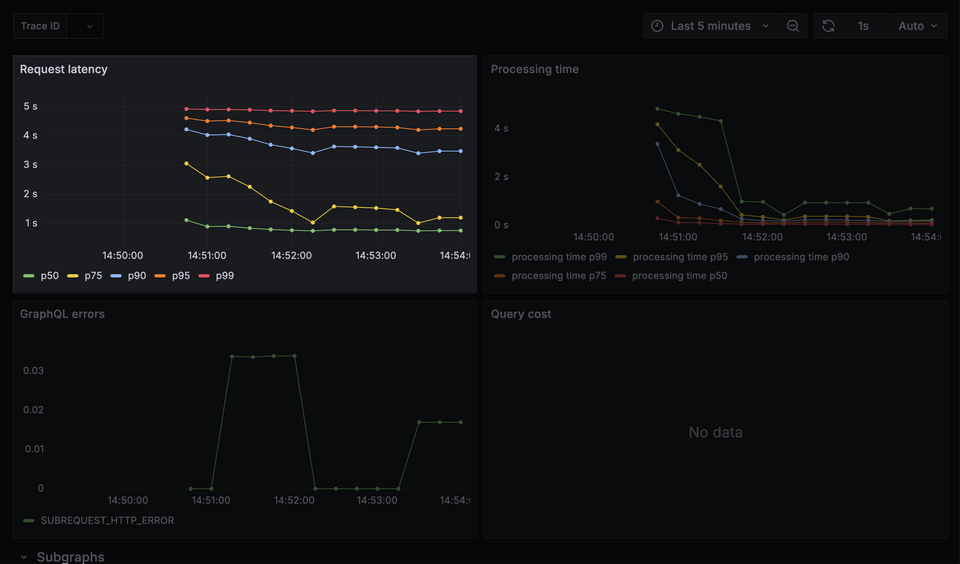

Request latency and processing time

Jumping down to the next row, we'll see two panels with data plotted in percentiles: Request latency and Processing time.

Request latency shows how long requests take, broken down by percentile (p50, p75, p90, p95, p99). Each percentile line marks the latency that the given percentage of requests stays at or under.

Here's how we break this down. (Note that the following are just example values; your dashboard may reflect something different for each percentile.)

| Percentile | Example value | Significance |
|---|---|---|
| p50 | 0.50s | 50% of requests execute in 0.5 seconds or less |
| p75 | 1.50s | 75% of requests execute in 1.5 seconds or less |
| p90 | 3.75s | 90% of requests execute in 3.75 seconds or less |
| p95 | 4.50s | 95% of requests execute in 4.5 seconds or less |
| p99 | 4.95s | 99% of requests execute in 4.95 seconds or less |

Taken another way, this means that half of the requests in our system finish within 0.5 seconds, while most of the slower half take anywhere from 0.5 seconds to about 5 seconds to be executed.
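If percentiles are new to you, the following sketch shows one way to compute them from a list of raw request durations. It uses the nearest-rank method on hypothetical sample data chosen to reproduce the example values in the table; a dashboard like ours more likely estimates percentiles from histogram buckets, so treat this as an illustration of the idea rather than how the panel is actually calculated.

```python
from typing import List


def percentile(values: List[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest value that at least pct% of values are <= to."""
    if not values:
        raise ValueError("need at least one value")
    if not 0 < pct <= 100:
        raise ValueError("pct must be between 1 and 100")
    ordered = sorted(values)
    # Integer ceiling of pct * n / 100, which avoids floating-point rounding.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[rank - 1]


# Hypothetical request durations, in seconds.
latencies_s = [
    0.12, 0.15, 0.20, 0.22, 0.30, 0.35, 0.40, 0.45, 0.48, 0.50,
    0.80, 1.00, 1.20, 1.40, 1.50, 2.50, 3.00, 3.75, 4.50, 4.95,
]

for pct in (50, 75, 90, 95, 99):
    print(f"p{pct}: {percentile(latencies_s, pct):.2f}s")
```

Notice how far apart p50 and p99 are in this sample: the typical request is fast, but the slowest ones take roughly ten times longer.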

Under Processing time, we'll see something similar. This panel shows us how long it takes the router to complete its processing-related tasks for most requests.

When the majority of our queries are taking multiple seconds to respond, we're left with a less-than-great user experience. Let's jump down to the Subgraphs section and get a more granular look at our services.

Subgraph requests and latency

Scroll down in your Grafana dashboard until you've reached the Subgraphs category. Spend a moment comparing the different latency panels for each of the four subgraphs we're working with.

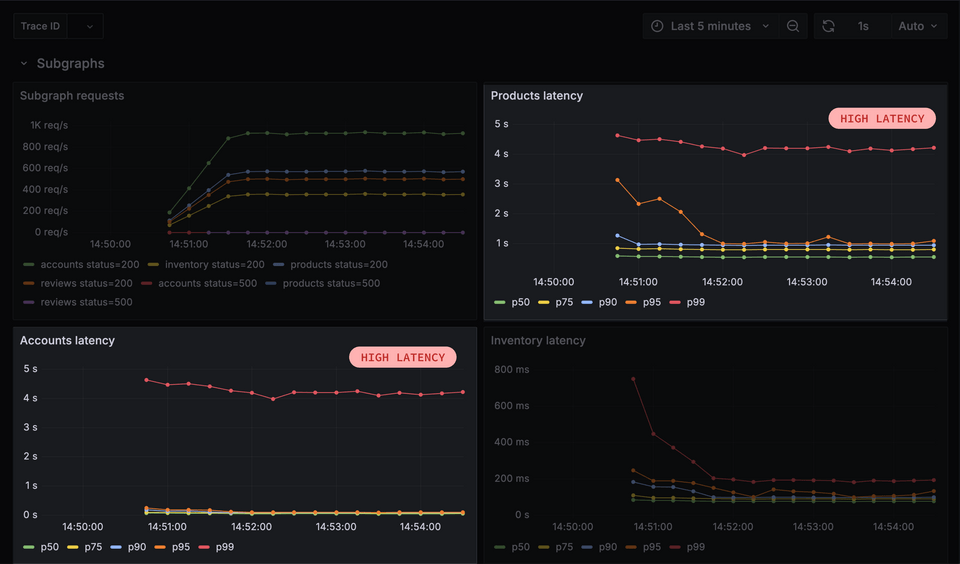

See anything interesting? Latency for both the products and accounts subgraphs is consistently high, with p99 values of about 5 seconds, while inventory and reviews spike every now and then but otherwise stay at reasonable levels.

A closer look shows that our accounts service has a consistent p99 value of five seconds, and receives close to 1000 requests per second!
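The comparison we just made by eye can also be expressed as a simple check. The sketch below flags subgraphs whose p99 latency exceeds a latency budget. The p99 values for inventory and reviews, the 1-second budget, and the function name are all hypothetical; only the roughly 5-second p99 for products and accounts comes from the dashboard described above.

```python
from typing import Dict, List


def over_budget(p99_by_subgraph: Dict[str, float], budget_s: float) -> List[str]:
    """Return the names of subgraphs whose p99 latency exceeds the budget, sorted by name."""
    return sorted(
        name for name, p99 in p99_by_subgraph.items() if p99 > budget_s
    )


# p99 latency in seconds per subgraph (inventory and reviews are made-up values).
p99_by_subgraph = {
    "products": 5.0,
    "accounts": 5.0,
    "inventory": 0.8,
    "reviews": 0.6,
}

slow_subgraphs = over_budget(p99_by_subgraph, budget_s=1.0)
print(slow_subgraphs)
```

A check like this only tells us where to look; it doesn't explain why a subgraph is slow.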

That's a good start—we've taken a look at some key data points, and identified where latency is higher than we'd like. But we'll gather some more information before making any changes!

Key takeaways

- The GraphOS router exposes a wide variety of metrics that can be represented in a data dashboard, such as: client requests, request latency, processing time, query planner performance, and subgraph requests.

- Some metrics are represented as values distributed across different percentiles (such as p50, p75, p99). We use these distributions to see how a particular component of our system performs for typical requests as well as for the slowest ones. We can see, for example, how long it takes 50% of our requests to resolve (p50), and compare this to how long it takes 99% of our requests to resolve (p99). This gives us a general idea of latency across the board, and where improvements might need to be made.

Up next

Time series charts are super useful, but they're one piece of the puzzle in diagnosing performance issues. Next, we'll take a look at system traces and logs.
