Overview

The router does a great job of handling all of our requests and delegating each piece to the subgraph responsible, but there's a lot more than meets the eye happening under the hood. Let's take a look at the overall router processing time, and see what we can do to speed things up.

In this lesson, we will:

- Inspect traces to chart a query's path to execution

- Review metrics for the router's query planner

- Discuss caching options for query plans and query plan requests

Router processing time

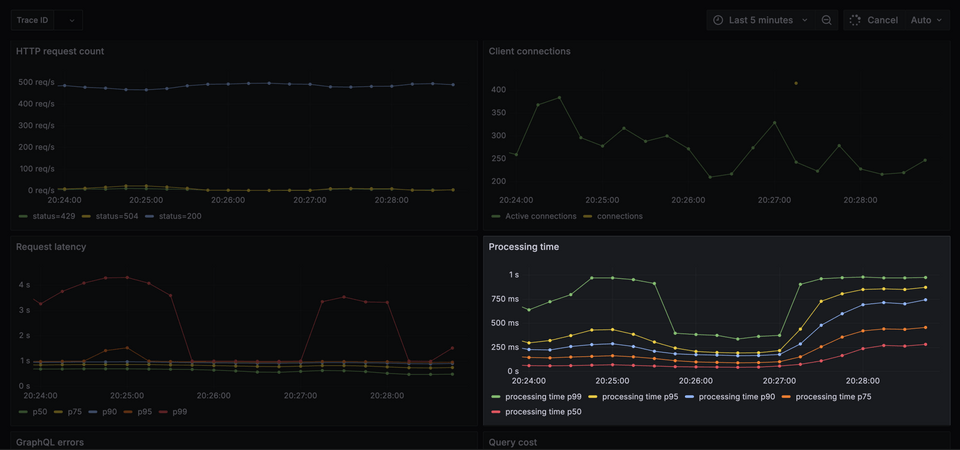

Returning now to the client-side, something looks curious:

This graph shows the actual time the router spends processing a request.

Most of a client request's latency lies in subgraph requests. But there are some CPU-intensive tasks performed in the router, and the time they take is included in the metrics here. We can see that total processing time nearly approaches 1s in places, which is a lot of overhead for the router.

This can become especially problematic as time spent processing one request can easily affect other requests waiting for their turn to be processed.

Inspecting router tasks

In general, the router adds very low overhead to requests. Its main sources of busy work are:

- Query planning

- GraphQL response validation and formatting

- GraphQL query parsing and validation

- Telemetry

- JSON serialization and deserialization

We can use traces to figure out which task in particular might be affecting our processing time. Jump back over to Explore in Grafana, and make sure that you've selected Tempo from the dropdown. (Recall that we can run our query for traces by entering opening and closing curly braces ({}) in the query input!)

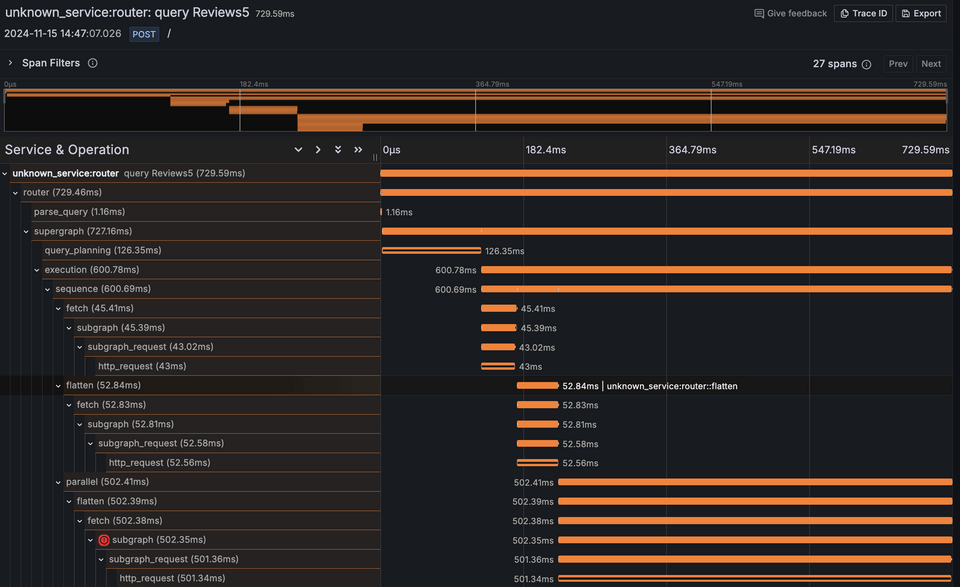

Let's take a look at a trace for one of our more robust queries. Look for a query like query Reviews5.

A few things stand out:

- of the 729ms required to execute the query, we're waiting about 43ms for one

subgraph_request; then 52ms for another; and finally 502ms for a third one. So most of the time involved here is spent waiting for subgraph requests. - 5th from the top, the

query_planningspan is the time spent generating a query plan. This takes about 126ms.

Even to plan out a query, 126ms seems high. This will certainly add a lot of overhead to the request, passing that latency onto subsequent requests waiting for processing. So what happens if this latency stacks up, and many more queries spend a lot of time in planning? That additional latency would continue to compound quickly for all requests.

Query planner metrics

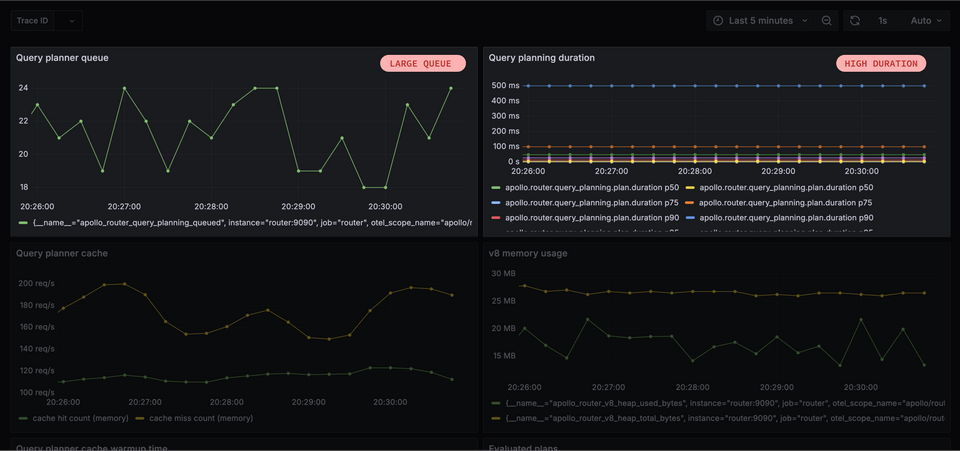

Let's return to our metrics dashboard. We can check out how the query planner is behaving in the panels at the bottom.

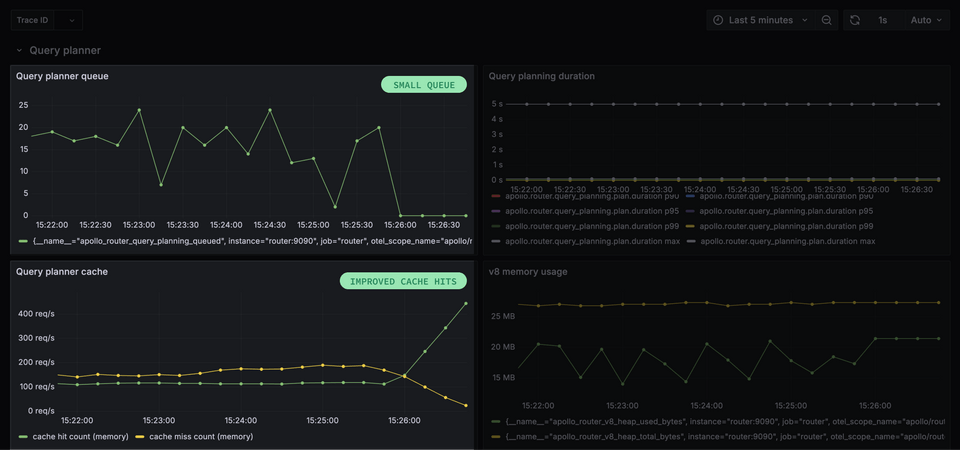

The Query planning duration panel shows the time spent planning, and we can see that it can get as high at 500ms, but depending on your computer it might approach one to five seconds. We also have a panel charting the size of the query planner queue, often approaching 24 queries that require a plan to be generated.

And at last, hit and miss metrics for the query planner cache, where we see between 150-220 requests per second hitting a cache miss.

To really make sense of these metrics, we need to understand how the router handles query planning.

Query planning and the cache

Query planning is an expensive task (usually taking between 1ms and 1s); because generating plans can greatly impact request processing time, the router caches its query plan results in memory.

Query plans in particular are a great candidate for caching. The process of generating a query plan is highly deterministic: it depends on the query, operation name, schema, router configuration, and often some plugin-specific information, such as authorization. So if we've already generated the query plan for a particular query, we don't need to repeat the process when the router receives the same query again; we can just reuse the query plan.

The router also works on reducing the query planning workload by deduplicating requests to the planner itself. If the router receives multiple identical queries, it generates the query plan for just one, and passes the generated plan to the others. The router also limits the footprint of the planner by limiting it to one CPU core by default.

To explore additional configuration options, check out the official router documentation on query planner pooling.

Caching in-memory is a great option, but it doesn't scale to multiple router instances. A query plan generated by one router isn't automatically accessible by other routers that might receive identical requests. To allow multiple router instances to share the plans they have calculated, we also have the option to cache query plans in a Redis database.

Improving cache performance

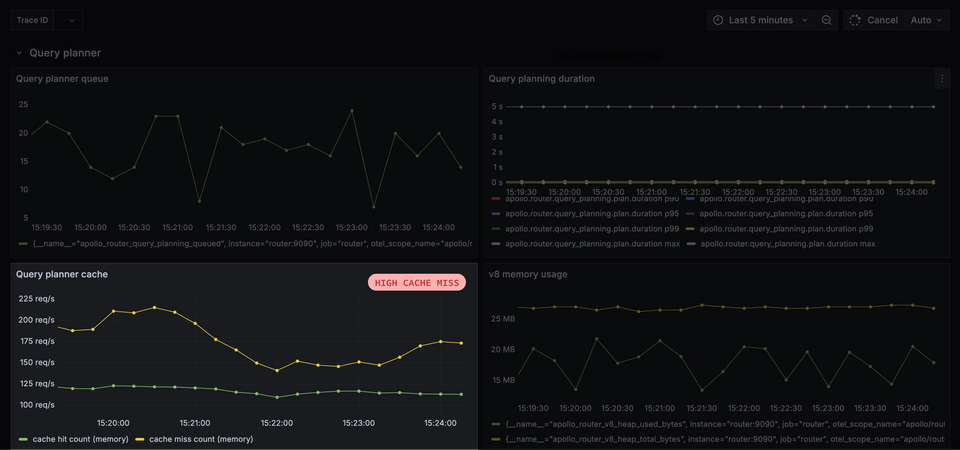

Returning to our Grafana dashboard, we should now have a better understanding of what's happening in the query planner.

- Our data indicates many cache misses. This means the router is spending a lot of time calculating query plans.

- We have many different queries coming in, so the query planning queue continues to grow. Each query has to wait for its turn to be planned, increasing latency.

- Planning time is high, between 10ms and 100ms.

It's highly likely that the router is spending most of its CPU time calculating query plans. We've seen something like this before; when we introduced timeouts for client requests in an earlier lesson, we applied a timeout value of 100ms—but still saw a higher execution time than expected. This was caused by the CPUs being occupied. If a request came in with a timeout of 100ms, but needed to wait 200ms to get some time with the CPU, then the timeout would trigger too late.

Let's return to router.yaml and tweak our settings. At the top of the file, we'll see the supergraph key, along with a section all about query_planning.

supergraph:introspection: truelisten: 0.0.0.0:4000query_planning:cache:in_memory:limit: 10

Right now we'll see that our in_memory cache is set with a limit of 10 items. And with up to 25 queries in our query planning queue, we're not giving our in-memory cache enough space to reuse the plans the router has already generated. Let's increase the capacity to 50 and see what happens.

query_planning:cache:in_memory:limit: 50

What a difference!

The effect is nearly immediate: cache misses drop to 0, and the queue size does too. Processing time has also dropped somewhat, while p50, p75 and p90 client latencies decrease.

We'll also notice the rate limiting errors increasing too. This is due to an increase in requests to the accounts subgraphs, because now the router can spend more time executing subgraph requests.

Looking to go deeper with the cache? Check out the official documentation for more on both in-memory and Redis-based caching.

Practice

Key takeaways

- As part of its "processing time", the router takes care of tasks such as query planning, query parsing and validation, response validation and formatting, telemetry, and serialization.

- The router emits various query planner metrics, such as how many queries are in the queue, the duration of planning queries, and cache hits and misses.

- We can cache query plans as well as requests to the planner, furthe reducing the processing time in the router for a query it has already handled before.

- The

query_planningproperty in the router configuration file allows us to specify the number of query plans the router should hold in its in-memory cache.

Up next

We've used timeouts, rate limiting, query deduplication, and increased the capacity of our query plan cache. In the next lesson, we'll explore a new feature that allows us to serve up subgraph responses—without bothering the subgraph more than once!

Share your questions and comments about this lesson

This course is currently in

You'll need a GitHub account to post below. Don't have one? Post in our Odyssey forum instead.