Overview

We've gotten a bit more control over our traffic; now let's add the final touches to our infrastructure to get back to cruising speed!

In this lesson, we will:

- Relax our rate limits to permit more traffic

- Learn how subgraph entity caching improves performance

- Enable a locally running Redis database as our entity cache

Raising rate limits

Let's start by raising the rate limit for the accounts subgraph to 800 requests per second.

```yaml
traffic_shaping:
  router:
    timeout: 5s
    global_rate_limit:
      capacity: 500
      interval: 1s
  all:
    timeout: 500ms
  subgraphs:
    accounts:
      timeout: 100ms
      global_rate_limit:
        capacity: 800
        interval: 1s
      deduplicate_query: true
    products:
      deduplicate_query: true
```

This shouldn't affect request latency for accounts, since we're deduplicating identical queries, but we will see an increase in the number of requests the accounts subgraph receives.
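Why does deduplication keep latency steady? When several identical queries to the same subgraph are in flight at the same moment, the router can send one of them and share the response with every caller. The Python sketch below models that idea with asyncio. It is not the router's implementation: QueryDeduplicator, fake_fetch, and the query string are hypothetical, and the 10 ms sleep stands in for subgraph latency.

```python
import asyncio
from typing import Awaitable, Callable, Dict


class QueryDeduplicator:
    """Hypothetical model of deduplicate_query (not the router's code):
    identical queries in flight at the same time share one upstream request."""

    def __init__(self, fetch: Callable[[str], Awaitable[str]]) -> None:
        self._fetch = fetch
        self._in_flight: Dict[str, "asyncio.Task[str]"] = {}

    async def query(self, body: str) -> str:
        task = self._in_flight.get(body)
        if task is None:
            # First caller for this query: send it to the subgraph.
            task = asyncio.ensure_future(self._fetch(body))
            self._in_flight[body] = task
            # Once it completes, later identical queries go upstream again.
            task.add_done_callback(lambda _done: self._in_flight.pop(body, None))
        # Identical callers wait on the same in-flight request.
        # shield() keeps one cancelled caller from cancelling it for everyone.
        return await asyncio.shield(task)


async def demo() -> int:
    calls = 0

    async def fake_fetch(body: str) -> str:
        nonlocal calls
        calls += 1
        await asyncio.sleep(0.01)  # simulated subgraph latency
        return f"data for {body}"

    dedup = QueryDeduplicator(fake_fetch)
    # Ten identical queries arrive at once...
    results = await asyncio.gather(*(dedup.query("{ me { id } }") for _ in range(10)))
    assert len(results) == 10
    return calls  # ...but the fake subgraph saw only this many requests


print(asyncio.run(demo()))  # 1
```

Every caller still waits roughly one subgraph round trip, which is why raising the rate limit mostly shows up as more requests rather than higher latency.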

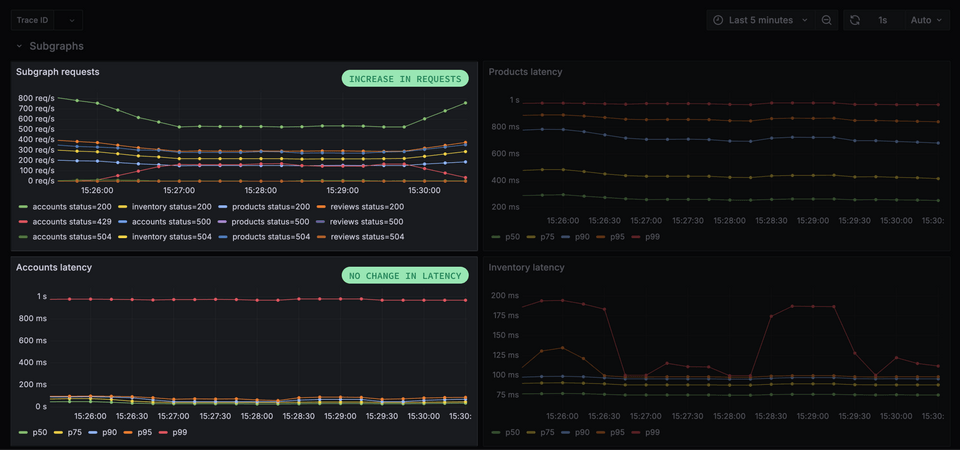

Successful requests to accounts rise to 800 RPS and rate-limiting errors decrease, while timeout errors increase slightly. This is because the 500ms timeout still applies to all subgraphs.
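Together, capacity and interval cap how many requests the router forwards to a subgraph per interval; requests above the cap get rate-limiting errors instead. As a rough illustration, the sketch below models that as a fixed-window counter. The fixed-window algorithm, the evenly spaced arrivals, and the offered load of 1,000 requests per second are all assumptions for illustration, and the router's actual limiting algorithm may differ.

```python
from typing import Tuple


class FixedWindowRateLimit:
    """Simplified, hypothetical model of global_rate_limit: at most `capacity`
    requests per `interval` seconds. The router's real algorithm may differ."""

    def __init__(self, capacity: int, interval: float) -> None:
        self.capacity = capacity
        self.interval = interval
        self._window_start = 0.0
        self._count = 0

    def allow(self, now: float) -> bool:
        if now - self._window_start >= self.interval:
            self._window_start = now  # a new window begins
            self._count = 0
        if self._count < self.capacity:
            self._count += 1
            return True
        return False  # the router would return a rate-limiting error here


def simulate(capacity: int, offered_rps: int, seconds: int = 3) -> Tuple[float, float]:
    """Return (accepted, rejected) requests per second for evenly spaced arrivals."""
    limiter = FixedWindowRateLimit(capacity=capacity, interval=1.0)
    accepted = rejected = 0
    for i in range(offered_rps * seconds):
        if limiter.allow(now=i / offered_rps):
            accepted += 1
        else:
            rejected += 1
    return accepted / seconds, rejected / seconds


# Hypothetical offered load of 1,000 requests per second to accounts.
print(simulate(capacity=500, offered_rps=1000))  # (500.0, 500.0)
print(simulate(capacity=800, offered_rps=1000))  # (800.0, 200.0)
```

Raising the capacity converts rejected requests into accepted ones, which is the shift the dashboard shows for accounts.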

Everything works well enough, except for the partial responses containing timeout errors. The 500ms timeout works for every subgraph except products. But if we raise the timeout for products, client request latency will increase right away, because the performance of this subgraph has a huge impact on our requests. What can we do to improve it?
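The trade-off follows from how the router waits on subgraphs: a timeout caps how long a slow subgraph can hold up the response, at the cost of dropping that subgraph's data. The sketch below simulates two parallel subgraph fetches with asyncio.wait_for. The latencies and timeouts are made-up, scaled-down values, and the sketch ignores the dependencies between fetches in a real query plan.

```python
import asyncio
from typing import Dict, List, Optional, Tuple

# Hypothetical latencies in seconds; products plays the slow subgraph.
LATENCY = {"accounts": 0.01, "products": 0.3}


async def fetch(subgraph: str) -> str:
    await asyncio.sleep(LATENCY[subgraph])  # simulated subgraph work
    return f"{subgraph} data"


async def fetch_with_timeout(subgraph: str, timeout: float) -> Tuple[str, Optional[str]]:
    try:
        value = await asyncio.wait_for(fetch(subgraph), timeout)
        return subgraph, value
    except asyncio.TimeoutError:
        return subgraph, None  # becomes an error entry in the response


async def execute(timeouts: Dict[str, float]) -> Tuple[Dict[str, str], List[str], float]:
    """Fetch every subgraph in parallel; return (data, errors, elapsed seconds)."""
    loop = asyncio.get_running_loop()
    start = loop.time()
    results = await asyncio.gather(
        *(fetch_with_timeout(name, t) for name, t in timeouts.items())
    )
    elapsed = loop.time() - start
    data = {name: value for name, value in results if value is not None}
    errors = [f"{name}: timed out" for name, value in results if value is None]
    return data, errors, elapsed


# One shared timeout: fast, but products times out and the response is partial.
partial = asyncio.run(execute({"accounts": 0.1, "products": 0.1}))
# Raise only the products timeout: complete data, but the client waits longer.
complete = asyncio.run(execute({"accounts": 0.1, "products": 0.5}))
print(partial[1])   # ['products: timed out']
print(complete[1])  # []
print(f"partial took {partial[2]:.2f}s, complete took {complete[2]:.2f}s")
```

Raising the timeout only moves the problem from errors to latency; making products itself faster is the way out.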

Subgraph entity caching

Since version 1.40.0, the router has supported the subgraph entity caching feature.

A single client request can trigger any number of smaller requests across the backend, and the wide range of data those requests can return (private and public, short and long TTLs) makes federated GraphQL responses a poor fit for classic client-side HTTP caches.

Entity caching solves this problem by letting the router answer identical subgraph queries with cached subgraph responses. The cached data is keyed per subgraph and entity, so different client queries can reuse the same previously cached entries. This also means that subgraphs receive fewer redundant requests: data that has already been requested and cached once can satisfy later requests.
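To make "keyed per subgraph and entity" concrete, here is an in-memory sketch. Each entry is keyed by (subgraph, type name, entity id) and expires after a TTL; a lookup serves cached entities and sends only the misses to the subgraph. EntityCache, resolve_entities, and fake_products_subgraph are hypothetical stand-ins: the router stores entries in Redis, and its real cache keys encode more information than this.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple

Entity = Dict[str, str]
CacheKey = Tuple[str, str, str]  # (subgraph, __typename, entity id): a simplification


class EntityCache:
    """Hypothetical in-memory stand-in for the router's Redis-backed cache."""

    def __init__(self, ttl: Optional[float], clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl  # None means entries never expire
        self.clock = clock
        self._store: Dict[CacheKey, Tuple[Entity, Optional[float]]] = {}

    def get(self, key: CacheKey) -> Optional[Entity]:
        hit = self._store.get(key)
        if hit is None:
            return None
        entity, expires_at = hit
        if expires_at is not None and self.clock() >= expires_at:
            del self._store[key]  # expired entries count as misses
            return None
        return entity

    def put(self, key: CacheKey, entity: Entity) -> None:
        expires_at = None if self.ttl is None else self.clock() + self.ttl
        self._store[key] = (entity, expires_at)


def resolve_entities(
    cache: EntityCache,
    subgraph: str,
    typename: str,
    ids: List[str],
    fetch: Callable[[List[str]], List[Entity]],
) -> List[Entity]:
    """Serve cached entities and send only the misses to the subgraph."""
    keys = [(subgraph, typename, entity_id) for entity_id in ids]
    found = {key: cache.get(key) for key in keys}
    missing = [key[2] for key, entity in found.items() if entity is None]
    if missing:
        for entity in fetch(missing):
            key = (subgraph, typename, entity["id"])
            cache.put(key, entity)
            found[key] = entity
    return [found[key] for key in keys]


# Usage with a fake clock so that expiry is easy to observe.
now = [0.0]
cache = EntityCache(ttl=10.0, clock=lambda: now[0])  # like ttl: 10s
calls: List[List[str]] = []


def fake_products_subgraph(ids: List[str]) -> List[Entity]:
    calls.append(ids)  # record what actually reached the "subgraph"
    return [{"id": i, "name": f"Product {i}"} for i in ids]


resolve_entities(cache, "products", "Product", ["1", "2"], fake_products_subgraph)
resolve_entities(cache, "products", "Product", ["2", "3"], fake_products_subgraph)
print(calls)  # [['1', '2'], ['3']]
now[0] = 11.0  # move past the TTL
resolve_entities(cache, "products", "Product", ["1"], fake_products_subgraph)
print(calls)  # [['1', '2'], ['3'], ['1']]
```

The second lookup only asks the subgraph for product 3, because product 2 was cached by the first, even though the two queries differ.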

To improve the performance of the products subgraph, and to cut down on the redundant requests it currently serves, we'll enable the entity cache. This feature requires a Redis database, which, fortunately, we already have running in our Docker setup.

Enabling entity caching

Back to router.yaml! We're going to add a new top-level key, preview_entity_cache. Under it, we need to do three things: first, enable the feature; next, specify where Redis is and how it's configured; and lastly, specify which subgraphs caching should apply to.

Here's the syntax.

```yaml
preview_entity_cache:
  enabled: true
  subgraph:
    all:
      enabled: false
      redis:
        urls: ["redis://redis:6379"]
        timeout: 5ms # Optional, by default: 2ms
        ttl: 10s # Optional, by default no expiration
    subgraphs:
      products:
        enabled: true
```
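This configuration disables caching for all subgraphs and then re-enables it for products, while the Redis settings live only under all. For that to work, products presumably inherits the settings it does not override. The sketch below models that precedence as a shallow merge over the lesson's settings transcribed into a Python dict; the merge rule and the effective_settings helper are assumptions for illustration, not a description of the router's code.

```python
from typing import Any, Dict

# The lesson's preview_entity_cache settings, transcribed into a dict.
CONFIG: Dict[str, Any] = {
    "enabled": True,
    "subgraph": {
        "all": {
            "enabled": False,
            "redis": {"urls": ["redis://redis:6379"], "timeout": "5ms", "ttl": "10s"},
        },
        "subgraphs": {"products": {"enabled": True}},
    },
}


def effective_settings(config: Dict[str, Any], subgraph: str) -> Dict[str, Any]:
    """Hypothetical helper. Assumed precedence: per-subgraph keys override
    `all`, and the top-level enabled flag switches the whole feature off."""
    section = config.get("subgraph", {})
    merged = dict(section.get("all", {}))  # start from the shared defaults
    merged.update(section.get("subgraphs", {}).get(subgraph, {}))  # apply overrides
    if not config.get("enabled", False):
        merged["enabled"] = False
    return merged


for name in ("accounts", "products"):
    settings = effective_settings(CONFIG, name)
    print(name, settings["enabled"], settings["redis"]["urls"])
# accounts False ['redis://redis:6379']
# products True ['redis://redis:6379']
```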

Again, the effect of our change should be immediate!

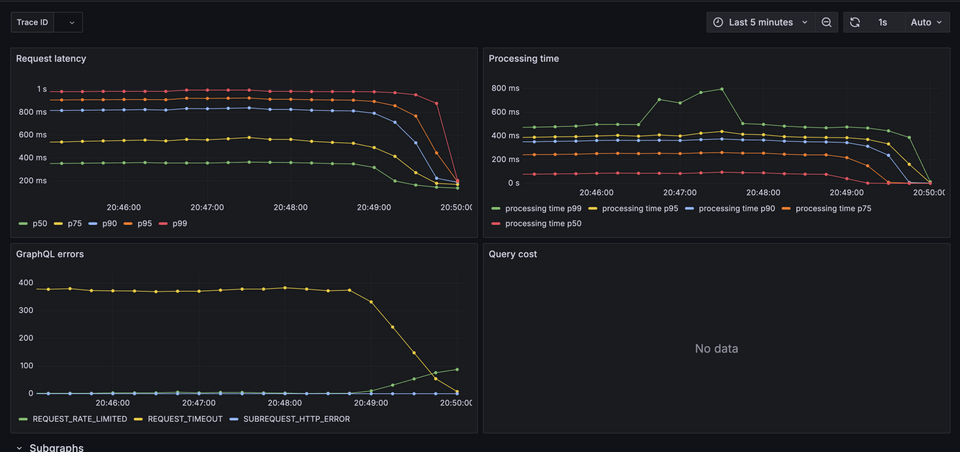

Timeout errors disappear, and client request latency drops, with p99 at 220ms. The router's processing time also drops: it has a lot less work to do now!

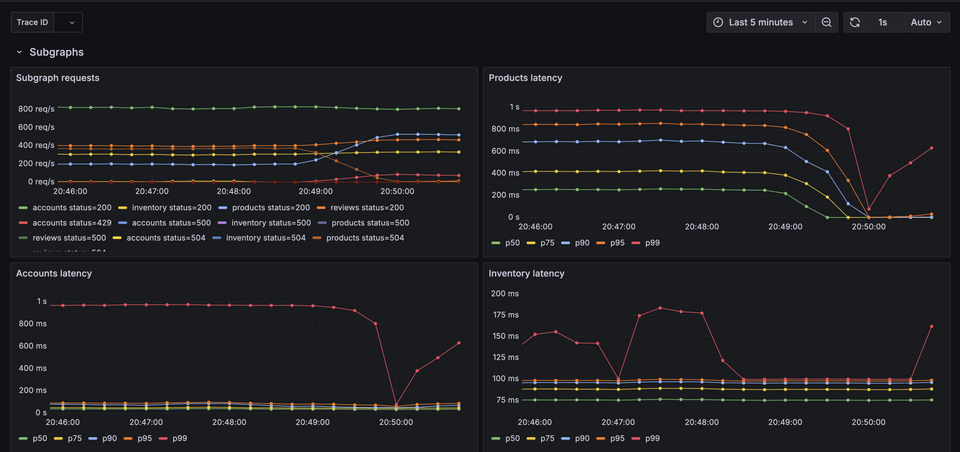

But the effect is most significant at the subgraph level: the products subgraph's p99 drops from 1s to 100ms. A huge improvement!


Key takeaways

- Entity caching allows us to cache data keyed per subgraph and entity. This reduces duplicate requests to subgraphs for data that's already been requested once before.

- The router uses Redis to cache data from subgraph query responses.

Journey's end

The router offers a large array of tools to improve performance. But to choose the right tool for a given situation, we need a good mental model of federated query execution. We also always need to keep in mind the intertwined relationship between subgraphs and navigate those interactions with care.

A federated graph is more than the sum of its parts: it's a live system with various services relying on and affecting each other. With a bit of configuration, it becomes a highly scalable and resilient API that will cover all your needs!

Thanks for joining us in this Odyssey course—we look forward to seeing you in the next one!
